Introduction

Overview

Teaching: 30 min

Exercises: 10 minQuestions

What is a command shell and why would I use one?

Objectives

Explain how the shell relates to the keyboard, the screen, the operating system, and users’ programs.

Explain when and why command-line interfaces should be used instead of graphical interfaces.

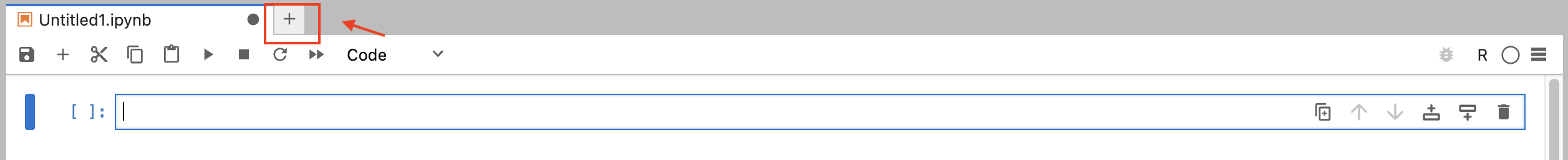

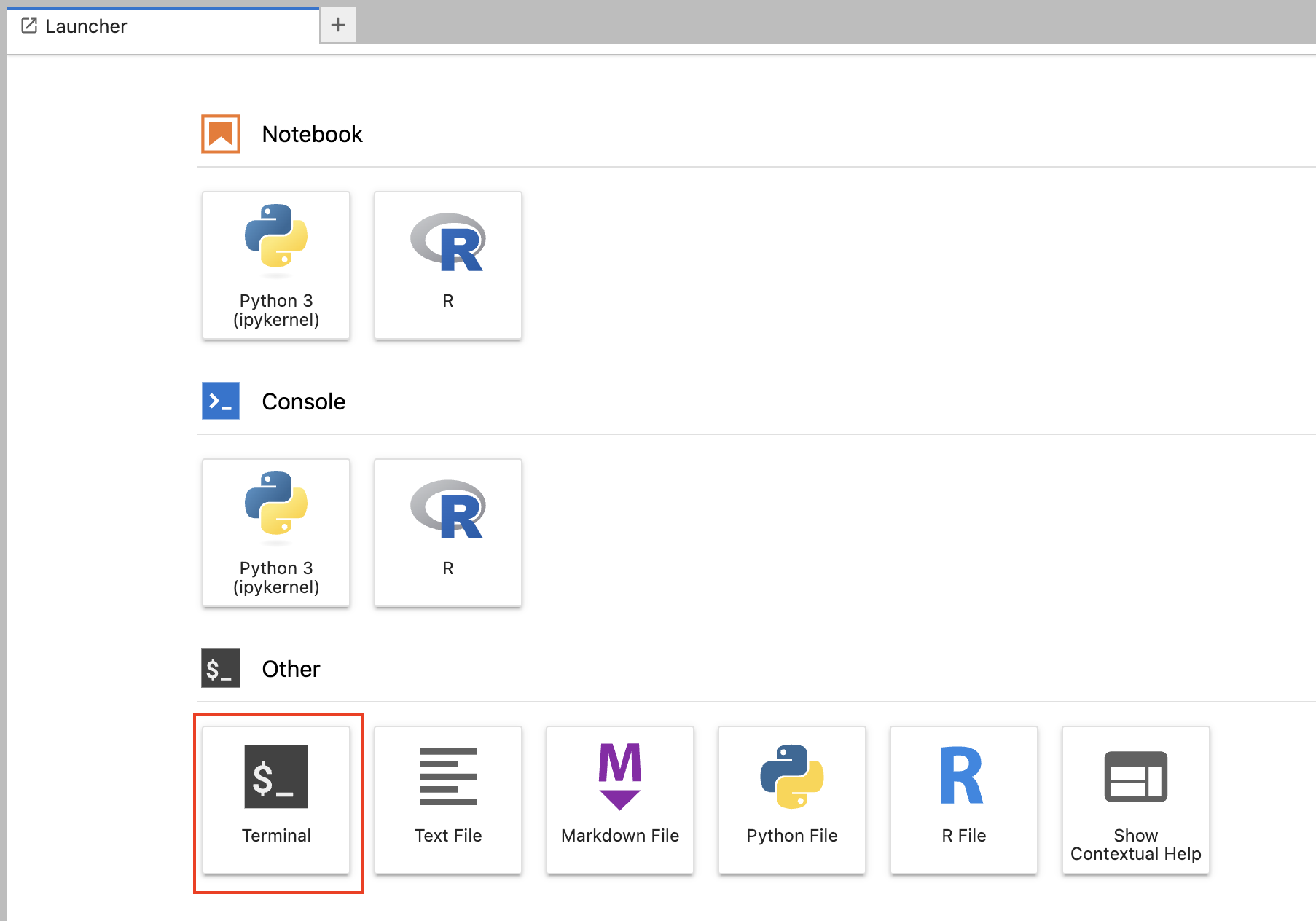

Open your terminal

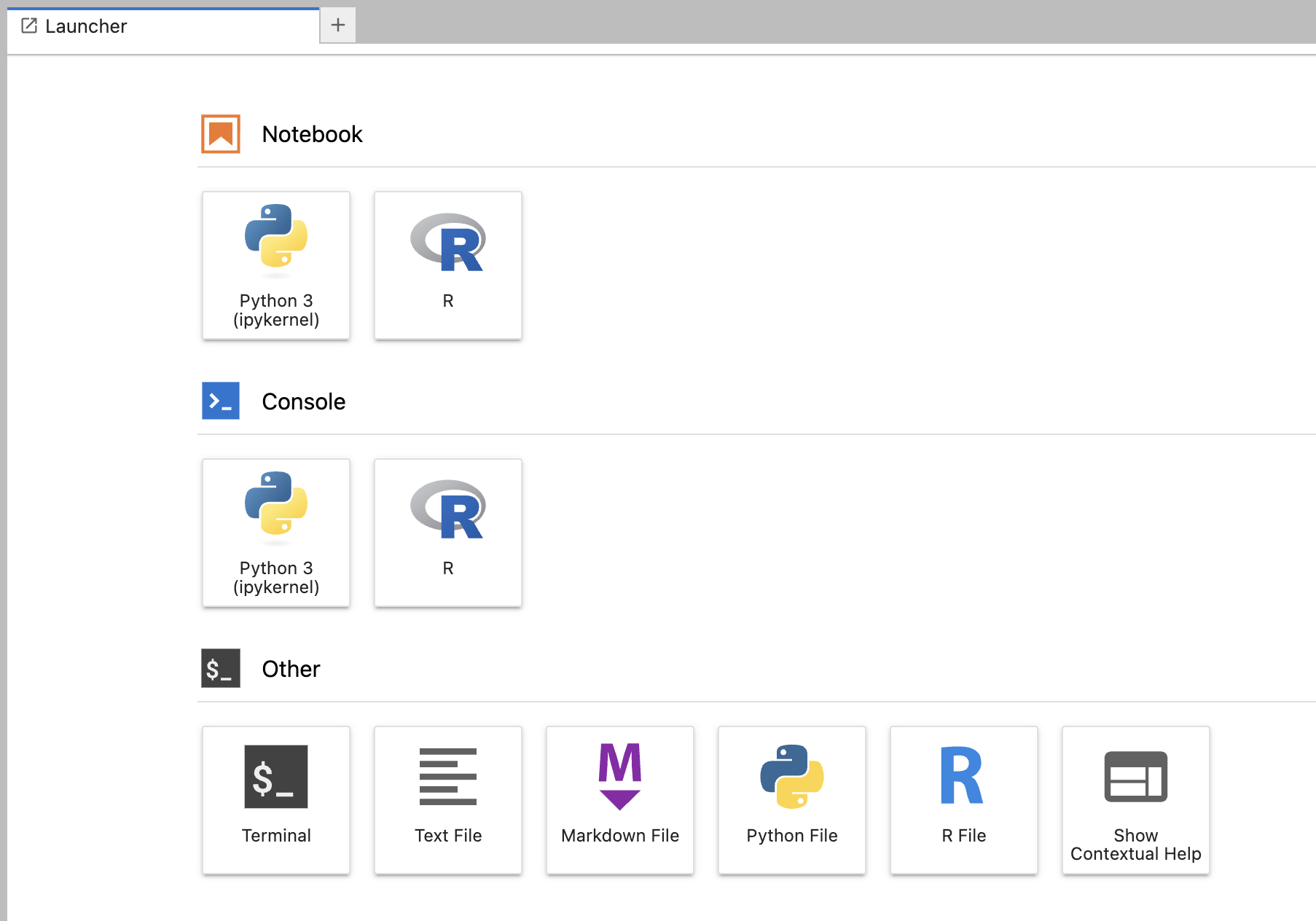

To start we will open a terminal.

- Go to the link given to you at the workshop

- Paste the notebook link next to your name into your browser

- Select “Terminal” from the “JupyterLab” launcher (or blue button with a plus in the upper left corner)

- After you have done this put up a green sticky not if you see a flashing box next to a

$

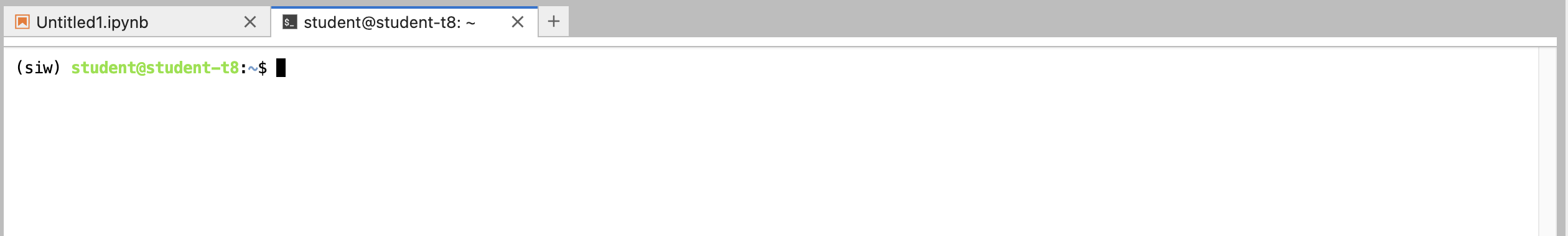

What am I seeing: when the shell is first opened, you are presented with a prompt, indicating that the shell is waiting for input.

$

The Shell

The shell is a program where users can type commands. With the shell, it’s possible to invoke complicated programs like climate modeling software or simple commands that create an empty directory with only one line of code. The most popular Unix shell is Bash (the Bourne Again SHell — so-called because it’s derived from a shell written by Stephen Bourne). Bash is the default shell on most modern implementations of Unix and in most packages that provide Unix-like tools for Windows. Note that ‘Git Bash’ is a piece of software that enables Windows users to use a Bash like interface when interacting with Git.

Using the shell will take some effort and some time to learn. While a GUI presents you with choices to select, CLI choices are not automatically presented to you, so you must learn a few commands like new vocabulary in a language you’re studying. However, unlike a spoken language, a small number of “words” (i.e. commands) gets you a long way, and we’ll cover those essential few today.

The grammar of a shell allows you to combine existing tools into powerful pipelines and handle large volumes of data automatically. Sequences of commands can be written into a script, improving the reproducibility of workflows.

In addition, the command line is often the easiest way to interact with remote machines and supercomputers. Familiarity with the shell is near essential to run a variety of specialized tools and resources including high-performance computing systems. As clusters and cloud computing systems become more popular for scientific data crunching, being able to interact with the shell is becoming a necessary skill. We can build on the command-line skills covered here to tackle a wide range of scientific questions and computational challenges.

The Prompt

The shell typically uses $ as the prompt, but may use a different symbol.

In the examples for this lesson, we’ll show the prompt as $.

Most importantly, do not type the prompt when typing commands.

Only type the command that follows the prompt.

This rule applies both in these lessons and in lessons from other sources.

Also note that after you type a command, you have to press the Enter key to execute it.

The prompt is followed by a text cursor, a character that indicates the position where your typing will appear. The cursor is usually a flashing or solid block, but it can also be an underscore or a pipe. You may have seen it in a text editor program, for example.

Note that your prompt might look a little different. In particular, most popular shell

environments by default put your user name and the host name before the $. Such

a prompt might look like, e.g.:

student@workshop-1:~$

Read Evaluate Print Loop

There are many ways for a user to interact with a computer. For example, we often use a Graphical User Interface (GUI). With a GUI we might roll a mouse to the logo of a folder and click or tap (on a touch screen) to show the content of that folder. In a Commandline Interface the user can do all of the same actions (e.g. show the content of a folder). On the Commandline the user passes commands to the computer as lines of text. Below are the steps in a Read Evaluate Print Loop (REPL):

- the shell presents a prompt (like

$) - user types a command and presses the Enter key

- the computer reads it

- the computer executes it and prints its output (if any)

- loop from step #4 back to step #1

Reasons to learn about the shell

- Many bioinformatics tools can only process large data in the command line version not the GUI.

- The shell makes your work less boring (same set of tasks with a large number of files)

- The shell makes your work less error-prone

- The shell makes your work more reproducible.

- Many bioinformatic tasks require large amounts of computing power

Let’s call some programs

The most basic command is to call a program to perform its default action. For example, call the program

whoamito return your username.whoamiYou can also call a program and pass arguments to the program, for example this command to find which shell we are using:

echo $SHELL

Glance at the Filesystem

The ls command will list the contents of

your current directory (directory is synonymous with folder). Any line that starts with # will not be executed. We can write comments to ourselves by starting the line with #.

# call ls to list current directory

ls

# pass one or more paths of files or directories as argument(s)

ls /home/student/

In a GUI you may customize your finder/file browser based on how you like to search. In general if you can do it on a GUI there is a way to use text to do it on the commandline. I like to see my most recently changed files first. I also like to see the date they were edited.

# call ls to list bin and show the most recently changed files first (with the `-t` option/flag)

ls -t /home/student/

# add the `-l` to show who owns the file, file size, and what date is was last edited

ls -t -l /home/student/

# a flags to distinguish Folders from files (`-F`) and to show "human readable" filesizes (`-h`)

ls -t -l -F -h /home/student/

# combine short flags for faster typing

ls -lthF /home/student/

The basic syntax of a unix command is:

- call the program

- pass any flags/options

- pass any “order dependent arguments”

Getting help

ls has lots of other options. There are common ways to find out how to use a command and what options it

accepts (depending on your environment). Today we will call the unix command man and pass the name of the program that we want a manual for as the argument for man.

$ man ls

Usage: ls [OPTION]... [FILE]...

List information about the FILEs (the current directory by default).

Sort entries alphabetically if none of -cftuvSUX nor --sort is specified.

Mandatory arguments to long options are mandatory for short options too.

-a, --all do not ignore entries starting with .

-A, --almost-all do not list implied . and ..

--author with -l, print the author of each file

-b, --escape print C-style escapes for nongraphic characters

Help menus show you the basic syntax of the command. Optional elements are shown in square brackets. Ellipses indicate that you can type more than one of the elements.

Help menus show both the long and short version of the flags. Use the short option when typing commands directly into the shell to minimize keystrokes and get your task done faster. Use the long option in scripts to provide clarity. It will be read many times and typed once.

When

mandoes not workIf

man PROGRAMdoes not show you a help menu there are other common ways to show help menus that you can try.

- call the program with only the

--helpflag Typeqto exit out of this help screen.- some bioinformatics programs will show a help menu if you call the tool without any flags or arguments (e.g.

samtools).

Command not found

If the shell can’t find a program whose name is the command you typed, it will print an error message such as:

$ ksSolution

ks: command not foundThis might happen if the command was mis-typed or if the program corresponding to that command is not installed. When your get an error message stay calm and give it a couple of read throughs. Error messages can seem akwardly worded at first but they can really help guide your debugging.

The Cloud

There are a number of reasons why accessing a remote machine is invaluable to any scientists working with large datasets. In the early history of computing, working on a remote machine was standard practice - computers were bulky and expensive. Today we work on laptops or desktops that are more powerful than the sum of the world’s computing capacity 20 years ago, but many analyses (especially in genomics) are too large to run on these laptops/desktops. These analyses require larger machines, often several of them linked together, where remote access is the only practical solution.

Schools and research organizations often link many computers into one High Performance Computing (HPC) cluster on or near the campus. Another model that is becoming common is to “rent” space on a cluster(s) owned by a large company (Amazon, Google, Microsoft, etc). In recent years, computational power has become a commodity and entire companies have been built around a business model that allows you to “rent” one or more linked computers for as long as you require, at lower cost than owning the cluster (depending on how often it is used vs idle, etc). This is the basic principle behind the cloud. You define your computational requirements and off you go.

The cloud is a part of our everyday life (e.g. using Amazon, Google, Netflix, or an ATM involves remote computing). The topic is fascinating, but this lesson says a few minutes or less so let’s get back to working on it for the workshop.

For this workshop starting a vm and setting up your working environment has been done for you. Going forward reach out to your organizations system administrators for your cluster for suggestions. To read more on your own here are lessons about working on the cloud and a local HPC. Additional lesson’s that you can run from a remote computer:

HUMAN GENOMIC DATA & SECURITY

Note that if you are working with human genomics data there might be ethical and legal considerations that affect your choice of cloud resources to use. The terms of use, and/or the legislation under which you are handling the genomic data, might impose heightened information security measures for the computing environment in which you intend to process it. This is a too broad topic to discuss in detail here, but in general terms you should think through the technical and procedural measures needed to ensure that the confidentiality and integrity of the human data you work with is not breached. If there are laws that govern these issues in the jurisdiction in which you work, be sure that the cloud service provider you use can certify that they support the necessary measures. Also note that there might exist restrictions for use of cloud service providers that operate in other jurisdictions than your own, either by how the data was consented by the research subjects or by the jurisdiction under which you operate. Do consult the legal office of your institution for guidance when processing human genomic data.

Key Points

Many bioinformatics tools can only process large data in the command line version not the GUI.

The shell makes your work less boring (same set of tasks with a large number of files)”

The shell makes your work less error-prone

The shell makes your work more reproducible.

Many bioinformatic tasks require large amounts of computing power

Navigating and Editing Files

Overview

Teaching: 4h min

Exercises: 30m minQuestions

How can I move around on the computer/vm?

How can I see what files and directories I have?

How can I specify the location of a file or directory on the computer/vm?

How can I specify the location of a file or directory in a bucket?

Objectives

Translate an absolute path into a relative path and vice versa.

Construct absolute and relative paths that identify specific files and directories.

Use options and arguments to change the behaviour of a shell command.

Demonstrate the use of tab completion and explain its advantages.

The Filesystem

The part of the operating system responsible for managing files and directories is called the file system. It organizes our data into files, which hold information, and directories (also called ‘folders’), which hold files or other directories.

Several commands are frequently used to create, inspect, rename, and delete files and directories. To start exploring them, we’ll go to our open shell window.

First, let’s find out where we are by running a command called pwd (which stands for ‘print working directory’). Directories are like places — at any time while we are using the shell, we are in exactly one place called our current working directory. Commands mostly read and write files in the current working directory, i.e. ‘here’, so knowing where you are before running a command is important. pwd shows you where you are:

$ pwd

Here, the response may be different on different computers. Often a session begins in the users home directory.

To understand what a file system is, let’s have a look at how the file system as a whole is organized. For the sake of this example, we’ll be illustrating a portion of the file system on our workshop VM. After this illustration, you’ll be learning commands to explore your own filesystem, which will be constructed in a similar way, but not be exactly identical.

On the workshop VM, part of the filesystem looks like this:

$ tree -L 2 /data/RNA/

/data/RNA/

├── bulk

│ ├── airway_raw_counts.csv.gz

│ └── airway_sample_metadata.csv

└── single_cell

└── README

Another way to diagram the filesystem is like this. The root directory is always named /.

Relative Paths

/ : root directory

Absolute Path : a path that starts from the root of the file system. Any path that starts with / is an absolute path.

Relative Path : a path that starts from current location or any location other than the root.

. : current working directory

cd /data/alignment/references/

ls ./GRCh38_1000genomes/

.. : one level up directory, also known as the parent directory of the current directory

ls -F ../

ls -F ../combined

ls -a

~ : user’s home directory

ls -F ~/

cd : a command to change your current working directory

- : previous directory. The dash is interpreted as the last directory that the user was in.

cd -

Note: if no special characters are used UNIX assumes your path begins in the current working directory

Create a Relative Path

Without changing directories create a relative path to list the contents of the

/data/alignment/combineddirectory (showing a trailing slash to see which are directories). For the second part of the code challenge, what does the commandcdwithout a directory name do?$ cd /data/alignment/references/GRCh38_1000genomes/Solution

ls -F ../../combined/Second part: changes the current working directory to the home directory

Software on the File System the PATH Variable

Commands like ls and (on our VM) samtools seem to exist as special words that the user can type to call a single version of a program. However, these programs are actual files on the file system that we can call because they are in one of the many locations that the shell knows to search when a command is executed.

How can we run samtools when we don’t see any program named samtools in our current working directory?

Location of Samtools

# generate a samtools help menu

samtools

# show the absolute path to samtools

which samtools

VM path for samtools

/home/student/miniconda3/envs/siw/bin/samtools

Location of ls

# show the absolute path to ls

which ls

VM path for ls

/usr/bin/ls

you or a systems administrator will probably install some bioinformatics programs that researchers use commonly

In this workshop those have been installed at /home/student/miniconda3/envs/siw/bin using a environment manager called

conda. Ask your systems administrators to assist with software installation and/or tips

for installing tools.

What if we want to know the version of samtools?

samtools --version

samtools 1.20 Using htslib 1.20 Copyright (C) 2024 Genome Research Ltd. Samtools compilation details: Features: build=configure curses=yes CC: /opt/conda/conda-bld/samtools_1720645213030/_build_env/bin/x86_64-conda-linu ...

You may want to start with the most recent version of a tool or need to use a previous tool to match prior analysis runs. It can be useful to record the absolute path to bioinformatics tools in commands that you run for publication or intend to have to run again in a consistant fashion. It can also be useful to include the bioinformatics tool version in the path to the tool for clarity.

If you are just glancing at the alignment header to see what genome it was aligned to (e.g. GRCh38) then you don’t need to be so explicit.

$ samtools view -H /data/alignment/combined/NA12878.dedup.bam

... @RG ID:NA12878_TTGCCTAG-ACCACTTA_HCLHLDSXX_L001 PL:illumina PM:Unknown LB:NA12878 DS:GRCh38 SM:NA12878 CN:NYGenome PU:HCLHLDSXX.1.TTGCCTAG @RG ID:NA12878_TTGCCTAG-ACCACTTA_HCLHLDSXX_L002 PL:illumina PM:Unknown LB:NA12878 DS:GRCh38 SM:NA12878 CN:NYGenome PU:HCLHLDSXX.2.TTGCCTAG @RG ID:NA12878_TTGCCTAG-ACCACTTA_HCLHLDSXX_L003 PL:illumina PM:Unknown LB:NA12878 DS:GRCh38 SM:NA12878 CN:NYGenome PU:HCLHLDSXX.3.TTGCCTAG ...

How the Shell Finds Programs

The PATH environment variables defines the shell’s search path.

In the shell a variable is defined without a starting dollar sign but when the value

of the variable is retrived you add the $ begining of the variable name. Tips: also wrap the variable name in curly braces {} so that the shell can clearly see the last character that belongs to the variable name. There cannot be a space on either side of the = sign.

# define a variable

$ project_name="LUAD"

# retrieve the value of the variable

echo ${LUAD}

# use export to define the variable for the shell session and for any programs called during the session

$ export project_name="LUAD"

When you run a command like ls or samtools, the shell splits $PATH into components to get a list of directories.

Unix uses : as a separator. The shell looks for the program in each directory in left-to-right.

Then the shell runs the first program with that name that it finds.

which reported that samtools was in /home/student/miniconda3/envs/siw/bin/. This is the second directory listed in our $PATH.

$ echo $PATH

/home/student/bin:/home/student/miniconda3/envs/siw/bin:/home/student/miniconda3/condabin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/snap/bin:/software/manta-1.6.0.centos6_x86_64/bin:/home/student/paragraph-v2.4a/bin:/home/student/gatk-4.6.0.0

You can add a path for a tool that you need to your path. Make sure to also redefine the current $PATH variable as the last portion of the path. Otherwise you may lose the ability to run cd, ls, etc.

$ export PATH=/NEW_PATH/:$PATH

CLI typing hints

- Tab : autocompletes paths (use this for speed and to avoid mistakes !!)

- ↑/↓ arrow : moves through previous commands

- Ctrla : goes to the beginning of a line

- Ctrle: goes to the end of the line

- short flags generally

-followed by a single letter- long flags generally

--followed by a word- flags are often called options in manuals (both terms are correct)

- command/program will be used interchangeably (a whole line of code is also called a command)

- To list your past commands: Type

historyin the command line

Buckets (not covered in workshop)

On your computer files are often stored “locally” on that computer in a directory. On the cloud permanent storage areas are called a “bucket.” The console that we are using is running on an ephemeral virtual machine (VM). We will copy files to our vm or read them from the bucket to use them. Any file we create or modify in our vm will be deleted when we turn off the vm. If your lab is working on the cloud then users will use a bucket to save files needed for analysis after the vm is stopped.

On google cloud the program gcloud storage allows you to run ls and cp commands to search and transfer files between VMs and your buckets.

Example of a file in a bucket

List a file in a bucket:

gcloud storage ls gs://genomics-public-data/resources/broad/hg38/v0/wgs_calling_regions.hg38.interval_listCopy a file from a bucket to your current working directory.

gcloud storage cp gs://genomics-public-data/resources/broad/hg38/v0/wgs_calling_regions.hg38.interval_list .

Key Points

The file system is responsible for managing information on the disk.

Information is stored in files, which are stored in directories (folders).

Directories can also store other directories, which then form a directory tree.

The command

pwdprints the user’s current working directory.The command

ls [path]prints a listing of a specific file or directory;lson its own lists the current working directory.The command

cd [path]changes the current working directory.Most commands take options that begin with a single

-.Directory names in a path are separated with

/on Unix.Slash (

/) on its own is the root directory of the whole file system.An absolute path specifies a location from the root of the file system.

A relative path specifies a location starting from any location other than the root.

A

~indicates your home directoryA

-indicates the last directory that you were inDot (

.) on its own means ‘the current directory’;..means ‘the directory above the current one’.

Working With Files and Directories

Overview

Teaching: 31h min

Exercises: 20 minQuestions

How can I create, copy, and delete files and directories?

How can I edit files?

Objectives

Delete, copy and move specified files and/or directories.

Create files in that hierarchy using an editor or by copying and renaming existing files.

Creating directories

We now know how to explore files and directories, but how do we create them in the first place?

In this episode we will learn about creating and moving files and directories, using our home directory.

First we see where we are and what we already have. We want to be in the home directory on the Desktop, which we can check using:

pwd

# if needed we can change to the home directory with

cd

Let’s create a new directory called workshop using the command mkdir (which has no output)

mkdir : make directory

mkdir workshop

ls -F ~/

mkdir workshop/output/

mkdir workshop/logs/

mkdir workshop/scripts/

mkdir workshop/mock_data/

mkdir workshop/mock_data/fasta/

touch : create new, empty files

touch genome.fa

vi : a plain text editor that is already installed on many linux computers. This is not the most user-friendly plain text editor. A more user-friendly option is BBEdit. You may independtly install this on your laptop if you need an editor for future programming tasks. It is free and allows you edit custom scripts that are stored on a remote cluster.

vi genome.fa

In vi type i. Then type out a few FASTA records. Like the ones below.

When you are finished editing type esc, then type :wq follwed by return to save and quit.

>chr1

AACCGCGCGTACGCGCGCGCGC

CTCTACNNNNNN

>chr2

TGTGTCTGAAANTGTCATGTCA

NNNTCNTGTGTGTGAAAAAAAA

NNTGCNA

>chr3

AACCGCGCGTATCTGAACGCGC

CTCTAAATCGAT

cp : copy file(s) to a new location

# makes a copy with the same filename

cp ~/genomes.fa ~/workshop/mock_data/fasta/

# makes a copy with a new filename (both .fa and .fasta and .fna are valid FASTA file suffixes)

cp ~/genomes.fa ~/genomes.fasta

# see new file

ls -thl ~/genomes.fa ~/workshop/mock_data/fasta/

mv : move files or directories (if they are moved in the same location then they are renamed)

mv ~/genomes.fasta ~/workshop/mock_data/fasta/

mv ~/genomes.fa ~/new_name_genomes.fa

rm : remove file (CAUTION: no undelete here !!)

rm ~/new_name_genomes.fa

rmdir : remove empty directory

mkdir ~/workshop/mock_data/wrong

rmdir ~/workshop/mock_data/wrong

File permissions

chmod : command to set permissions of a file or directory

ls -thl ~/workshop/mock_data/fasta/genomes.fa

The command ls -thl lists the files in the current folder and displays them in the long listing format. While this may initially look complex, we can break this down in the following left to right order:

A set of ten permission flags

- Link count (which is irrelevant to this course)

- The owner of the file

- The associated group

- The size of the file

- The data that the file was last modified

- The name of the file

- The permission flags are the important thing we want to look at here. We can further break these down into the following three basic

Permission Types:

- Read - Which refers to a user’s capability to read the contents of the file.

- Write - Which refer to a user’s capability to write or modify a file or directory.

- Execute - Which affects a user’s capability to execute a file or view the contents of a directory.

Each of these permission types is listed in the _rwxrwxrwx section of the ls output. The first character marked by an underscore is the special permission flag that can vary. It shows things like whether the item is a directory.

The following set of three characters (rwx) is for the owner permissions.

The second set of three characters (rwx) is for the Group permissions.

The third set of three characters (rwx) is for the All Users permissions.

See all options at http://www.onlineconversion.com/html_chmod_calculator.html.

- The value

7is the file/directory can be read, written to and executed. - With a

5the file/directory can be read and executed (but not written to!). - With a

0the file/directory can’t be read, written to or executed.

Your bioinformatics sequence and reference files should be read only.

chmod -R 550 ~/workshop/mock_data/fasta

echo "oops" > ~/workshop/mock_data/fasta/genomes.fa

Tokens and key files work something like passwords and should be read only by you (with no permissions for your group and other users).

touch ~/workshop/logs/gdc_token_example.txt

chmod 500 ~/workshop/logs/gdc_token_example.txt

Key Points

Repeating yourself

Overview

Teaching: 30 min

Exercises: 20 minQuestions

How can I combine existing commands to produce a desired output?

How can I perform the same actions on many different files?

How can I save and re-use commands?

Objectives

Explain the advantage of linking commands with pipes and filters.

Redirect a command’s output to a file.

Write a loop that applies one or more commands separately to each file in a set of files.

Trace the values taken on by a loop variable during execution of the loop.

Explain the difference between a variable’s name and its value.

Write a shell script that runs a command or series of commands for a fixed set of files.

Run a shell script from the command line.

Pipes and Filters

Now that we know a few basic commands, we can finally look at the shell’s most powerful feature:

the ease with which it lets us combine existing programs in new ways.

We’ll start with the directory /data/alignment/references/GRCh38_1000genomes/ that contains GRCh38 reference files.

The .fa extension indicates that a files is in FASTA format, a simple text format that specifies the nucleic or amino acid sequences.

head : returns the first lines of a file (default number of lines is 10)

tail : returns the last lines of a file (default number of lines is 10)

$ cd /data/alignment/references/GRCh38_1000genomes/

$ head GRCh38_full_analysis_set_plus_decoy_hla.fa

$ tail GRCh38_full_analysis_set_plus_decoy_hla.fa

grep : program to find lines that match a pattern

^ : regex (regular expression) which matches the first character

| : pipes output from the command on the left as input to the command on the right

The vertical bar, |, between the two commands is called a pipe. It tells the shell that we want to use the output of the command on the left as the input to the command on the right. Nothing prevents us from chaining pipes consecutively. We can for example send the output of head directly to grep, and then send the resulting output to sort (a command that sorts lines of text). This removes the need for any intermediate files.

This idea of linking programs together is why Unix has been so successful. Instead of creating enormous programs that try to do many different things, Unix programmers focus on creating lots of simple tools that each do one job well, and that work well with each other. This programming model is called ‘pipes and filters’. We’ve already seen pipes; a filter is a program like wc or sort that transforms a stream of input into a stream of output. Almost all of the standard Unix tools can work this way. Unless told to do otherwise, they read from standard input, do something with what they’ve read, and write to standard output.

The key is that any program that reads lines of text from standard input and writes lines of text to standard output can be combined with every other program that behaves this way as well. You can and should write your programs this way so that you and other people can put those programs into pipes to multiply their power.

$ # returns lines that contain GRCh38

$ cat GRCh38_full_analysis_set_plus_decoy_hla.fa | grep "GRCh38"

$ # returns lines that start with (^) the > symbol

$ cat GRCh38_full_analysis_set_plus_decoy_hla.fa | grep "^>"

$ # counts lines that start with (^) the > symbol

$ cat GRCh38_full_analysis_set_plus_decoy_hla.fa | grep -c "^>"

* : glob (this translates to zero of more of any character)

$ ls /data/alignment/references/*/*fa

$ cat /data/alignment/references/*/*fa | grep -c "^>"

Writing to files

> : redirects output to a file

The greater than symbol, >, tells the shell to redirect the command’s output to a file instead of printing it to the screen. This command prints no screen output, because everything that wc would have printed has gone into the file lengths.txt instead. If the file doesn’t exist prior to issuing the command, the shell will create the file. If the file exists already, it will be silently overwritten, which may lead to data loss. Thus, redirect commands require caution.

$ cat /data/alignment/references/*/*fa | grep -c "^>" > ~/workshop/output/seq_counts.txt

$ cat ~/workshop/output/seq_counts.txt

>> : appends output to the end of a file

$ cat /data/alignment/references/*/*fa | grep -c "^>" >> ~/workshop/output/seq_counts.txt

$ cat ~/workshop/output/seq_counts.txt

Loops

Loops are a programming construct which allow us to repeat a command or set of commands for each item in a list. As such they are key to productivity improvements through automation. Similar to wildcards and tab completion, using loops also reduces the amount of typing required (and hence reduces the number of typing mistakes).

Suppose we have dozens of genome sequence alignment files in SAM, BAM or CRAM format. For this example, we’ll use the /data/*/*cram files which only have two example files, but the principles can be applied to many many more files at once.

The structure of these files is the same and that allows many tools (including QC tools to evaluate them). Let’s look at the files:

$ # the header describes the reference and read groups

$ samtools view -H /data/alignment/combined/NA12878.dedup.bam

$ # the first three alignments are printed to the screen to look at

$ samtools view /data/alignment/combined/NA12878.dedup.bam | head -3

We would like to get QC metrics for each alignment file. For each file, we would need to execute the command samtools flagstat. We’ll use a loop to solve this problem, but first let’s look at the general form of a loop, using the pseudo-code below:

# The word "for" indicates the start of a "For-loop" command

for thing in list_of_things

#The word "do" indicates the start of job execution list

do

# Indentation within the loop is not required, but aids legibility

operation_using/command $thing

# The word "done" indicates the end of a loop

done

and we can apply this to our example like this:

for cram in /data/*/*cram; do

echo ${cram}

echo "working..."

samtools flagstat ${cram}

echo "done"

done

Follow the Prompt

The shell prompt changes from

$to>and back again as we were typing in our loop. The second prompt,>, is different to remind us that we haven’t finished typing a complete command yet. A semicolon,;, can be used to separate two commands written on a single line.

When the shell sees the keyword for, it knows to repeat a command (or group of commands) once for each item in a list. Each time the loop runs (called an iteration), an item in the list is assigned in sequence to the variable, and the commands inside the loop are executed, before moving on to the next item in the list. Inside the loop, we call for the variable’s value by putting $ in front of it. The $ tells the shell interpreter to treat the variable as a variable name and substitute its value in its place, rather than treat it as text or an external command.

In this example, the list is three filenames: /data/cancer_genomics/COLO-829BL_1B.variantRegions.cram and /data/cancer_genomics/COLO-829_2B.variantRegions.cram. Each time the loop iterates, we first use echo to print the value that the variable ${cram} currently holds. This is not necessary for the result, but beneficial for us here to have an easier time to follow along. Next, we will run the flagstat command on the file currently referred to by ${cram}. The first time through the loop, ${cram} is COLO-829BL_1B.variantRegions.cram. The interpreter runs the command head on COLO-829BL_1B.variantRegions.cram and prints the QC metrics. For the second iteration, ${cram} becomes COLO-829_2B.variantRegions.cram. This time, the shell runs head on COLO-829_2B.variantRegions.cram and prints the QC metrics. Since the list was only two items, the shell exits the for loop.

Same Symbols, Different Meanings

Here we see

>being used as a shell prompt, whereas>is also used to redirect output. Similarly,$is used as a shell prompt, but, as we saw earlier, it is also used to ask the shell to get the value of a variable.If the shell prints

>or$then it expects you to type something, and the symbol is a prompt.If you type

>or$yourself, it is an instruction from you that the shell should redirect output or get the value of a variable.

When using variables it is good practice to put the names into curly braces to clearly delimit the variable name: $cram is equivalent to ${cram}, but is different from ${cr}am. You may find this notation in other people’s programs.

We have called the variable in this loop cram in order to make its purpose clearer to human readers. The shell itself doesn’t care what the variable is called; if we wrote this loop as:

for x in /data/*/*cram; do

echo ${x}

echo "working..."

samtools flagstat ${x}

echo "done"

done

It would work exactly the same way. Don’t do this. Programs are only useful if people can understand them, so meaningless names (like x) or misleading names (like temperature) increase the odds that the program won’t do what its readers think it does.

In the above examples, the variables (thing, filename, x and temperature) could have been given any other name, as long as it is meaningful to both the person writing the code and the person reading it.

Note also that loops can be used for other things than filenames, like a list of numbers or a subset of data.

Key Points

wc counts lines, words, and characters in its inputs (see SWC primer).

cat displays the contents of its inputs.

sort sorts its inputs (see SWC primer).

head displays the first 10 lines of its input by default without additional arguments.

tail displays the last 10 lines of its input by default without additional arguments.

command > [file] redirects a command’s output to a file (overwriting any existing content!!).

command >> [file] appends a command’s output to a file.

first | second is a pipeline. The output of the first command is used as the input to the second.

The best way to use the shell is to use pipes to combine simple single-purpose programs (filters)

A for loop repeats commands once for every thing in a list.

Every for loop needs a variable to refer to the thing it is currently operating on.

Use $name to expand a variable (i.e., get its value). ${name} can also be used.

Do not use spaces, quotes, or wildcard characters such as * or ? in filenames, as it complicates variable expansion.

Give files consistent names that are easy to match with wildcard patterns to make it easy to select them for looping.

Use the ↑/↓ keys to scroll through previous commands to edit and repeat them.

Use Ctrl+R to search through the previously entered commands (see SWC primer).

Use history to display recent commands, and !number to repeat a command by number (see SWC primer).

Save commands in files (usually called shell scripts) for re-use.

bash filename runs the commands saved in a file.

$@ refers to all of a shell script’s command-line arguments (see SWC primer).

$1, $2, etc., refer to the first command-line argument, the second command-line argument, etc.

Place variables in quotes if the values might have spaces in them.

Read Alignment and Small Variant Calling

Overview

Teaching: min

Exercises: minQuestions

Objectives

Intro to Alignment Lecture

https://drive.google.com/file/d/1HysQvr9pEj0DInCuF9oBqHCpzA2ZlUDT/view?usp=sharing

Getting Started

Log in to your instances through Jupyter notebooks and launch a terminal.

Next, find the places in the directories where you are going to work.

All of the data you will need for these exercises are in

/data/alignment

There are multiple subdirectories inside that directory that you will access for various parts of this session. Part of the aim of these exercises is for you to become more familiar with working with files and commands in addition to learning the processing steps, so for the most part there will not be full paths to the files you need, but all of them will be under the alignment directory.

You CAN put your output anywhere you want to. That’s also part of the workshop. I would recommend you use the following space:

/workshop/output

I would recommend making a directory under that for everything to keep it separate from what you did yesterday or will do in the following sessions. You could do something like

mkdir /workshop/output/alignment

However, if you think you will find it confusing to have “alignment” in both in the input and output directory paths, you could try something different like align_out or alnout or whatever you want. It’s only important that you remember it.

Part of this work will require accessing all the various data and feeding it into the programs you will run and directing your output. Certain steps will require that you be able to find the output of previous steps to use it for the input to the next steps. Keeping your work organized this way is a key part of running multi-step analysis pipelines. Yesterday we discussed absolute and relative paths. During this session you will need to keep track of where you are in the filesystem (pwd), how to access your input data from there, and how to access your output files and directories from there.

It is up to you if you want to do everything with absolute paths, with relative paths, or a mix. It is up to you if you want to cd to the output directory, the input directory, or a third place. Do whichever feels most comfortable to you. Note that if you choose to do everything with absolute paths, your working directory does not matter, but you will type more.

Tab completion is your friend. If there are only three things I want you to remember from this workshop, they are tab completion, tab completion, and tab completion.

Copy and Paste

Copy and paste is not your friend. It may be possible to copy and paste commands from this markdown into the Jupyter terminal. Please note that this more often than not fails in general because text in html or pdf or Word documents has non-printing characters or non-standard punctuation (like em dashes and directional quotation marks) which are not processed by the unix shell and will generate errors. The same is true with copying text that spans multiple lines. Because of this, most of the code blocks in this section are intentionally constructed to prevent copying and pasting. That is, the commands will be constructed, but full paths will not be given and real names of files will be substituted with placeholders. As a convention, if a name is in UPPERCASE ITALIC or as _UPPERCASE_, it is the placeholder for a filename or directory name you need to provide. If you copy and paste this, it will fail to work.

The exercises we will do

What we will do today is take some data from the Genome in a Bottle sample, also part of 1000 Genomes, NA12878, and this person’s parents, NA12891 and NA12892, and align them to the human genome. Aligning a whole human genome would take hours, and analyzing it even longer, so I have extracted a subset of reads that align to only chromosome 20, which is about 2% of the genome and will align in a reasonable amount of time. We will still align to the entire genome, because you should always align to the whole genome even if you think you have only targeted a piece of it because there are always off target reads, and using the whole genome for alignment improves alignment quality. We will walk through the following steps:

- Build an index for the bwa aligner (on a small part of the genome)

- Align reads to the genome with bwa mem

- Merge multiple alignment files and sort them in coordinate order (with gatk or samtools)

- Mark duplicate reads with gatk

- Call variants using gatk HaplotypeCaller (on a small section of chromosome 20)

- Filter variants using gatk VariantFiltration

We will also skip a couple of steps, indel realignment and base quality score recalibration (BQSR), but we will discuss them.

Along the way we will discuss the formatting and uses of several file formats:

- fasta

- fastq

- sam

- bam

- cram

- gatk dup metrics

- vcf

- gvcf

Running bwa

bwa is the Burrow-Wheeler Aligner. We’re going to use it to align short reads.

First we’re going to see an example of building the bwt index. Indexing an entire human genome would again take an hour or more, so we will just index chromosome 20. Look for it in the references subdirectory of /data/alignment and make a copy of it to your workspace using cp then index it with bwa. This should take about 1 minute.

cp /data/alignment/references/chr20.fa _MYCHR20COPY_

bwa index -a bwtsw _MYCHR20COPY_

The -a bwtsw tells bwa which indexing method to use. It also supports other indexing schemes that are faster for small genomes (up to a few megabases, like bacteria or most fungi) but do not scale to vertebrate sized genomes. You can see more detail about this by running bwa index with no other arguments, which produces the usage.

If you now look in the directory where you put MYCHR20COPY, you will now see a bunch of other files with the same base or root name as your chromosome fa, but with different extensions. When you use the aligner, you tell it the base file name of your fasta and it will find all the indices automatically.

Normally we do not want to make copies of files to do work, but in this case you had to because indexing requires creating new files and you do not have write permission to /data/alignment/references, so you cannot make the index files (try it if you want).

We will not actually use this index, so feel from to delete these index files or move them out of the way. This was just so you can see how that step works.

Now we will actually run bwa on the chr20 data, but against the whole reference genome, which is in

/data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa

The reads from chromosome 20 are in

/data/alignment/chr20/NA12878.fq.dir/

less /data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa

less /data/alignment/chr20/NA12878.fq.dir/NA12878_TTGCCTAG-ACCACTTA_HCLHLDSXX_L001_1.fastq

Now we will align the reads from one pair of fastqs to the reference. The command is below, but please read the rest of this section before you start running this.

bwa mem -K 100000000 -Y -t 8 -o _SAMFILE1_ _REFERENCE_ _READ1FASTQ_ _READ2FASTQ_

To break down that command line:

- bwa mem launches the bwa minimal exact match algorithm

- -K 100000000 tells bwa to process reads in chunks of 100M, regardless of the number of threads, which mainly forces it to produce deterministic output

- -Y says to use soft instead of hard clipping for supplementary alignments

- -t 8 tells it to use 8 threads, which maximally utilizes your 8 vCPU cloud instances

- -o SAMFILE1 tells it to put its output (in SAM format) in SAMFILE1, which by convention ends in “.sam”

- READ1FASTQ and READ2FASTQ are the paired fastq files

By default, bwa sends output to stdout (which is your screen, unless you redirected it), which is not very useful by itself, but allows you to pipe it into another program directly so that you do not have write an intermediate alignment file. In our case, we want to be able to look at and manipulate that intermediate file, but it can be more efficient not to write all that data to disk just to read it back in and throw the sam file away later.

bwa can take either unpaired reads, in which there is only one read file argument, or paired reads, in which case there are two as here, or if you have interleaved fastq (with read 1s and read 2s alternating in the same file), it will take one fastq, with the -p option. Note that the fastqs come after the reference because there is always only one reference but could be one or two fastqs.

When you pick the fastqs, pick a pair that have the same name except for _1.fastq and _2.fastq at the end.

There is another option you can add. We do not strictly need it here, but you would want to do this in a production environment, and files you get from a sequencing center already aligned ought to have this done. This is to add read group information to the sam. Read groups are collection of reads that saw exactly the sam lab process (extaction, library construction, sequencing flow cell and lane) and thus should share the same probabilities of sequencing errors or artifacts. Labeling these during alignment allows us to keep track of them downstream if we want to do read group specific processing. The argument to bwa mem is -R RG_INFO and looks like this:

-R '@RG\tID:NA12878_TTGCCTAG-ACCACTTA_HCLHLDSXX_L001\tPL:illumina\tPM:Unknown\tLB:NA12878\tDS:GRCh38\tSM:NA12878\tCN:NYGenome\tPU:HCLHLDSXX.1.TTGCCTAG-ACCACTTA'

If you want to format this for your reads, you can reconstruct the information from the filename.

- ID is the name of the read group as is the fastq filename up to but not including the _1/_2.fastq

- PL is illumina

- PM is Unknown

- LB is NA12878

- DS is GRCh38

- SM is NA12878

- CN is NYGenome

- PU is the ID with NA12878_ chopped off (it’s formatted slightly differently above, but for our purposes, it’s the same information)

Adding the read groups is obviously a lot of effort and also error prone (I wrote a perl script to launch all the jobs and format this argument), so if you do not want to do it, you can skip it.

The bwa command should run for about 3 minutes. Once you finish one set, please pick a different set of fastqs and align those to the same reference with a different output file. You will need at least 2 aligned sam files for the next steps. Your output sam file should be about 700Mb (for each run you do, but will vary slightly by read group). If it is much smaller than this or the program ran for much less time, you have done something wrong.

We will stop here and take a look at each of these file formats (fasta, fastq, sam, bam, cram).

Intro to Variant Calling

https://drive.google.com/file/d/1hVpzIwBL1HeLyupUwIhtHxn349veBWoX/view?usp=sharing

Merging and Sorting

As you noticed, you now have multiple sam files. This is typical that we do one alignment for each lane of sequencing we do, and we often do many lanes of sequencing because we pool many samples and run them across many flow cells. They are also in sam file format, which is bulky, and we want bam instead. Lastly, they are not all sorted in coordinate order, which is needed in order to index the files for easy use.

Thus, we want to merge and sort these files. There are two programs that will allow us to do this. One is samtools. Samtools is written in C using htslib, a C library for interacting with sam/bam/cram format data. It has the advantage of being fairly easy to use on the command line, running efficiently in C, and allowing data to be piped in and out. It has the disadvantage of generally having less functionality than gatk, requiring more (but simpler) steps to do something, and occasionally generating sam/bam files that are mildly non-compliant with the spec. The other is GATK (the Genome Analysis ToolKit), written in Java using htsjdk. GATK has the advantage of allowing you do multiple steps in one go (as you may see here), offering many different functions, and being more generally compliant with specifications and interoperable with other tools. It has the drawbacks of long and convoluted command lines that do not easily support piping data (although with version 4 they have abstracted the interface to the JVM, which helps a lot with the complex usage).

First, we will look at how to do this with GATK:

gatk MergeSamFiles [-I _INPUTSAM_]... -O _MERGEDBAM_ -SO coordinate --CREATE_INDEX true

Note the [-I INPUTSAM]… portion. Because GATK does not know how many sam/bam files you want to merge in advance, it makes you specify each one with the -I argument. This means you either need to tediously type this out by hand (a good time to perhaps consider cd to the directory with the sam files) or programmatically assemble the command line using a script outside the unix command line.

If you are behind on the previous step or want to make a bigger bam file, all the aligned sams can be found in

/data/alignment/chr20/NA12878.aln

They differ slightly in the flow cell names and the lane numbers. Tab completion will be your friend typing these out.

At the end of this, we will get one bam with all the sams merged, sorted in genomic coordinate order, and then indexed (the .bai file next to the merged bam. The -SO coordinate argument to gatk tells it that in addition to whatever else it is doing, it should sort the reads in coordinate order before spitting them out. The –CREATE_INDEX true tells it to make an index for the new bam.

If you run this with all 12 sams, it should take 3-4 minutes (not counting the time to type the command).

We can also do this using samtools, but it takes multiple steps. We have to merge first, then sort, the index.

samtools merge _MERGEDBAM_ _INPUTSAM_...

Note that the syntax is a lot simpler. Also, because all the input files go at the end without any option/argument flags, you can use a glob (*) to send all the sam files in a directory without typing them out (e.g., /data/alignment/chr20/NA12878.aln/NA12878*sam), which is a lot easier to type. However, you will note there is no index, and if you looked inside, the reads not sorted. So we still need to do:

samtools sort -o _SORTEDBAM_ _INPUTBAM_

samtools index _SORTEDBAM_

Note that the -o is back. samtools sort again spits the output to stdout if you don’t say otherwise. The index command does not take an output because it just creates the index using the base name of the input. It does, however, use the extension .bam.bai instead of just .bai. Both are valid.

The whole samtools thing takes about the same time as GATK’s one action. In my test it also made a sorted bam that was about 80% the size of GATK’s, although both programs offer different levels of compression and the defaults may be different.

We will stop here and take a look at each of the file formats we have used so far (fasta, fastq, sam, bam, cram).

Intro to Variant Calling

https://drive.google.com/file/d/1hVpzIwBL1HeLyupUwIhtHxn349veBWoX/view?usp=sharing

Marking Duplicates

Sometimes you get identical read pairs (or single end reads with identical alignments, but those are more likely to occur by random chance than identical pairs). These are usually assumed to be the result of artifical duplication during the sequencing process, and we want to mark all but one copy as duplicates so they can be ignored for downstream analyses. Again, both GATK and samtools do this, but even the author of samtools says that GATK’s marker is superior and you should not use samtools. The GATK argument looks like this:

gatk MarkDuplicates -I _INPUTBAM_ -O _DEDUPBAM_ -M _METRICS_ --CREATE_INDEX true

We give it the name of input and the name of our output. We also have to give a metrics file. This is a text file and typically get the extension .txt. It contains informtion about the duplicate marking that can be useful. We use –CREATE_INDEX because we will get a new bam with the dups marked, but we do not need -SO because we are not reordering the bam.

If you are stuck up to this point, you can find a merged and sorted bam in /data/alignment/chr20/NA12878.aln.

We can take a look at the duplicate marked bam and the metrics file.

NOT Realigning Indels

Some of you may have heard of a process called indel realignment (although maybe not). This used to be a part of the GATK pipeline that would attempt to predict the presence of indels and adjust the alignments to prevent false SNVs from being called due to misalignment of reads with indels. The HaplotypeCaller now does this internally (but by default does not alter the bam), so we do not do it separately now. (It is also very time consuming.)

Not Running Base Quality Score Recalibration (BQSR)

There is much discussion about whether this still has value at all. Mainly if you are running a large project over several years, you may be forced to change sequencing platforms, and recalibration can help with compatibility. Otherwise, it is probably not worthwhile.

We will not run it for several reasons:

- These reads went through a pipeline which binned quality, which means the recalibration will be very coarse

- These reads have already gone through BQSR once. Generally, running a second time is not terrible, but in the limit, infinitely recalibrating will eventually collapse all bases to a single quality score

- It is unclear whether quality recalibration will remain relevant going forward

- It takes a long time

- The command lines are really tedious to type (yes, even compared to MergeSamFiles)

Calling Variants

We will call SNPs and indels using GATK’s HaplotypeCaller. HaplotypeCaller takes a long time to run, so we can’t even do all of chromosome 20 in a reasonable amount of time. Instead, we will run a small piece of chromosome 20, from 61,723,571-62,723,570 (yes there is a reason I picked that). There are two ways you can do this. You can extract that region from the bam using samtools

samtools view -b -o _SMALLBAM_ _DEDUPBAM_ chr20:61723571-62723570

You can then run HaplotypeCaller on the SMALLBAM.

Alternatively, you can run HaplotypeCaller on the entire chr 20 bam, but tell it to only look in that region.

gatk HaplotypeCaller -O _VCF_ -L chr20:61723571-62723570 -I _BAM_ -R /data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa

Calling variants is only half the process. Now we need to filter them. We also do that with GATK. For a whole genome, we typically use Variant Quality Score Recalibration (VQSR), but there are not enough variants in the tiny region we called to build a model for that, so we will use the best practices filtering. It’s a long tedious command again:

gatk VariantFiltration -R /data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa \

-O _FILTEREDVCF_ -V _INPUTVCF_ \

--filter-expression 'QD < 2.0' --filter-name QDfilter \

--filter-expression 'MQ < 40.0' --filter-name MQfilter \

--filter-expression 'FS > 60.0' --filter-name FSfilter \

--filter-expression 'SOR > 3.0' --filter-name SORfilter \

--filter-expression 'MQRankSum < -12.5' --filter-name MQRSfilter \

--filter-expression 'ReadPosRankSum < -8.0' --filter-name RPRSfilter

You can type the \ characters to spread this across several lines so it is easier to read.

Now we can look at the filtered VCF. If you did not get a filtered vcf, you can use one from /data/alignment/vcfs/NA12878.chr20.61.final.vcf

There is one last thing we will do if we have time, which is make a gvcf. When we do large projects, we typically do not call the samples one at a time. We preprocess each sample with haplotype caller than jointly call them using GenotypeGVCFs. We will not do that since we are only looking at one sample, but we will make the GVCF.

gatk HaplotypeCaller -O _GVCF_ -L chr20:61723571-62723570 -I _BAM_ -R /data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa -ERC GVCF

We can take a look at the GVCF and then end here.

Feel free to go back and run any of these steps again with different programs or different inputs.

Key Points

Structural Variation in short reads

Overview

Teaching: 90 min

Exercises: 0 minQuestions

What is a structural variant?

Why is structural variantion important?

Objectives

Explain the difference between SNVs, INDELs, and SVs.

Explain the different types of SVs.

Explain the evidence we use to discover SVs.

Review

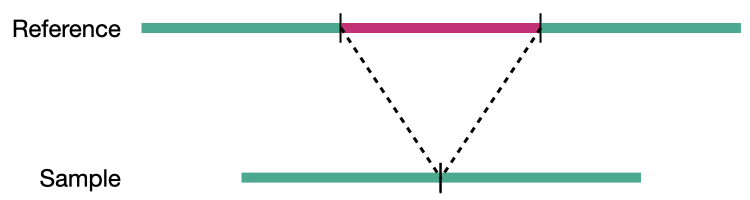

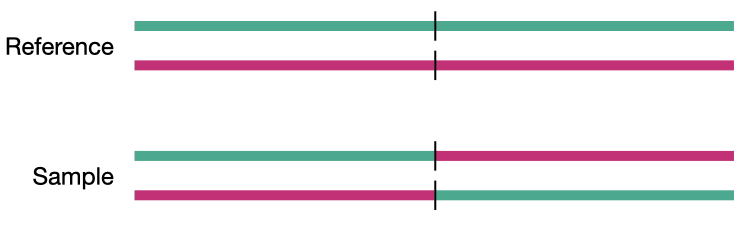

Simple read alignment

Simple InDel

What are structural variants

Structural variation is most typically defined as variation affecting larger fragments of the genome than SNVs and InDels; for our purposes those 50 base pairs or greater. This is an admittedly arbitrary definition, but it provides us a useful cutoff between InDels and SVs.

Importance of SVs

SVs affect an order of magnitude more bases in the human genome in comparison to SNVs (Pang et al, 2010) and are more likely to associate with disease.

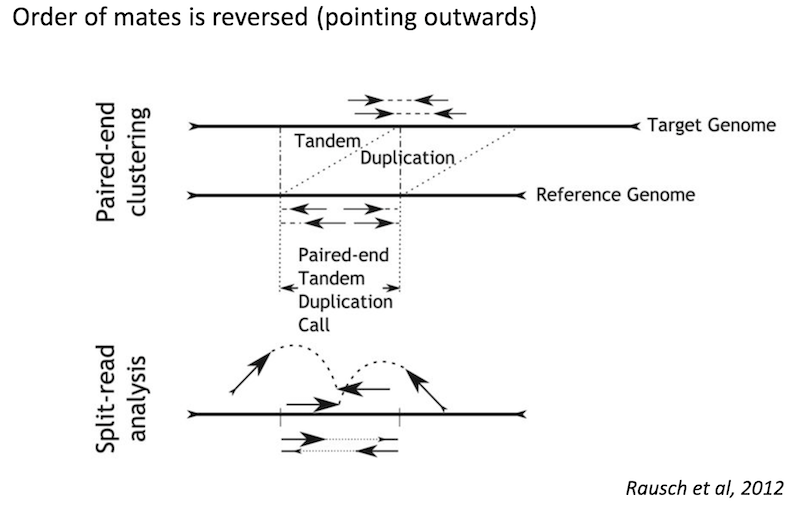

Structural variation encompases several classes of variants including deletions, insertions, duplications, inversions, translocations, and copy number variations (CNVs). CNVs are a subset of structural variations, specifically deletions and duplications, that affect large (>10kb) segments of the genome.

Breakpoints

The term breakpoint is used to denote a boundry between a structural variation and the reference.

Examples

Deletion

Insertion

Duplication

Inversion

Translocation

Detecting structural variants in short-read data

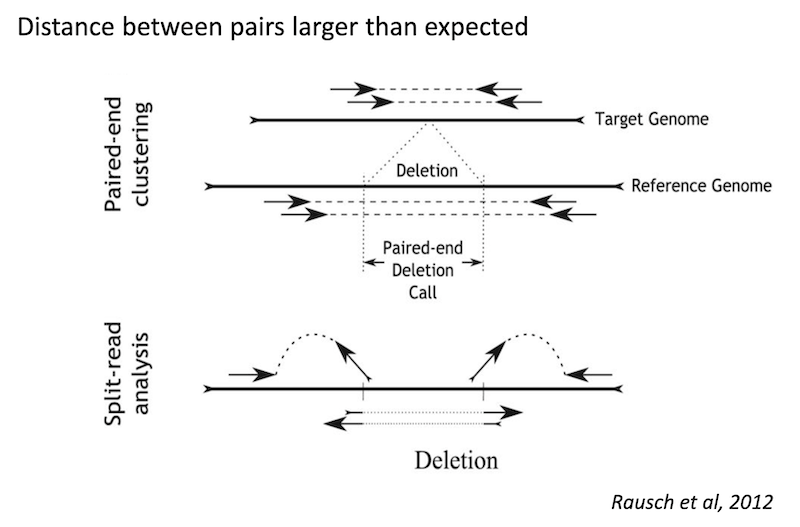

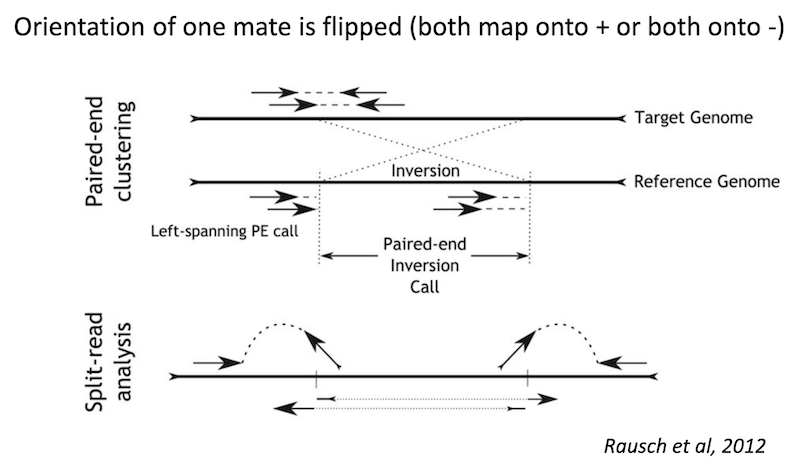

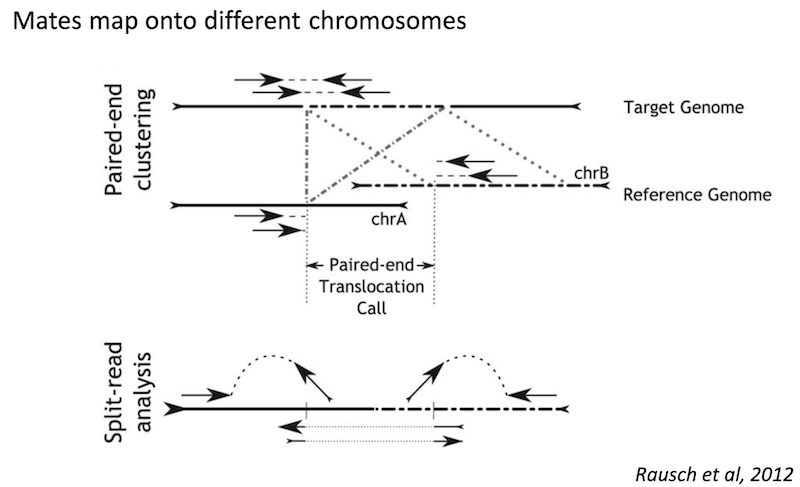

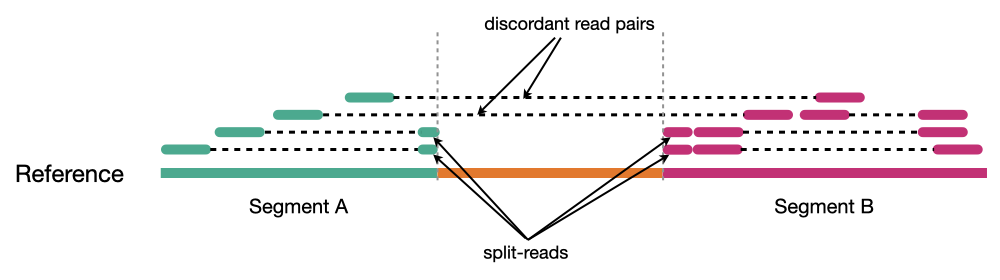

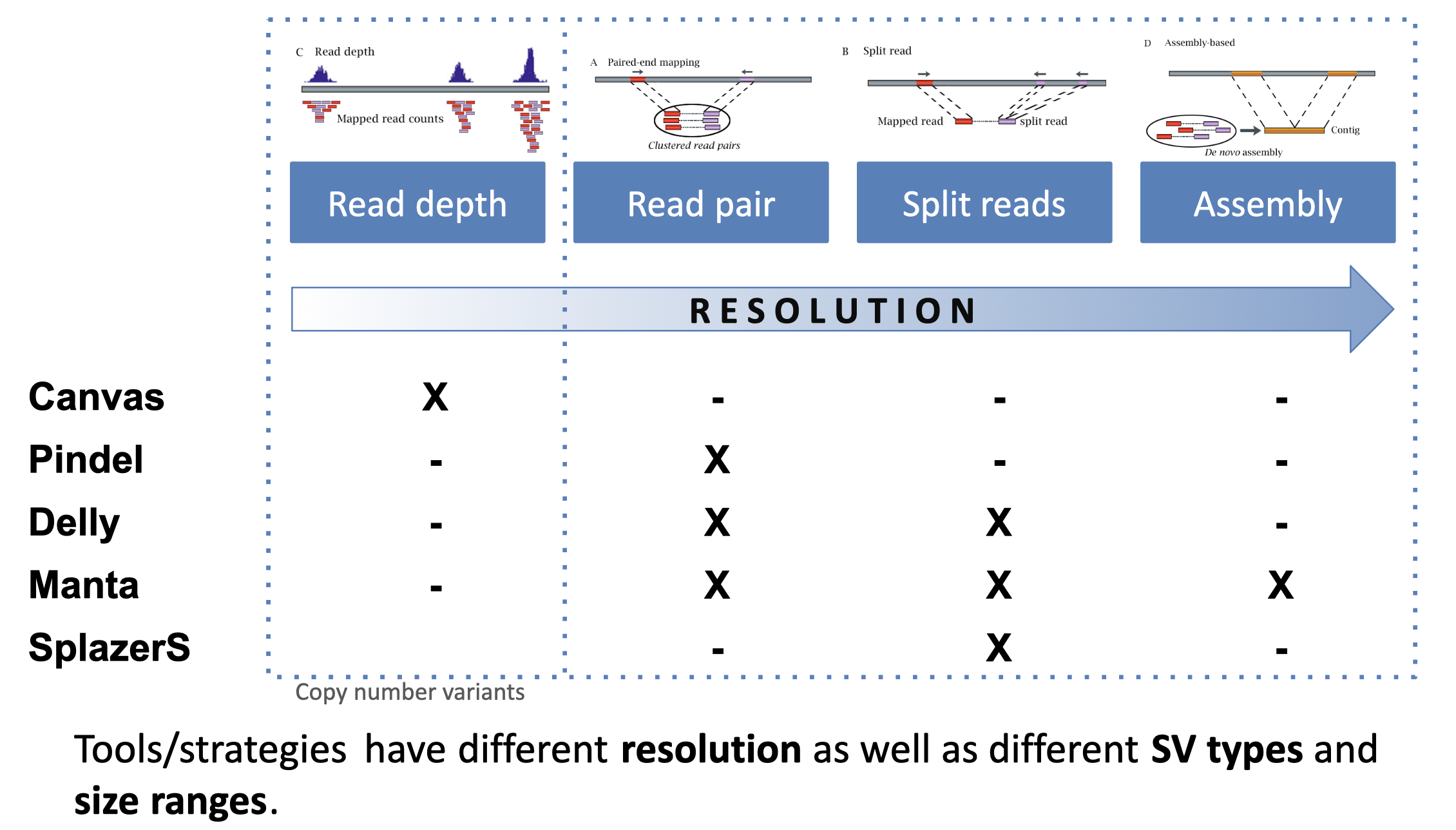

Because structural variants are most often larger than the individual reads we must use different types of read evidence than those used for SNVs and InDels which can be called by simple read alignment. We use three types of read evidence to discover structural variations: discordant read pairs, split-reads, and read depth.

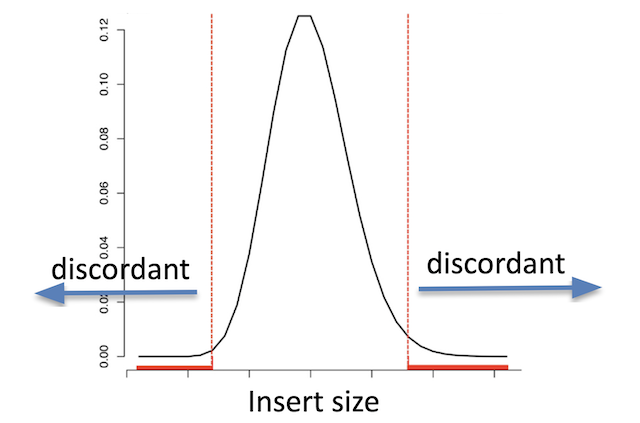

Discordant read pairs have insert sizes that fall significantly outside the normal distribution of insert sizes.

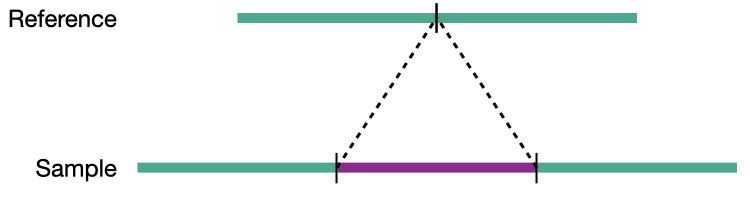

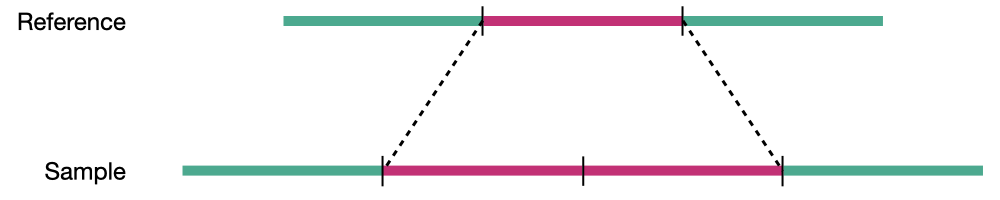

Insert size distribution

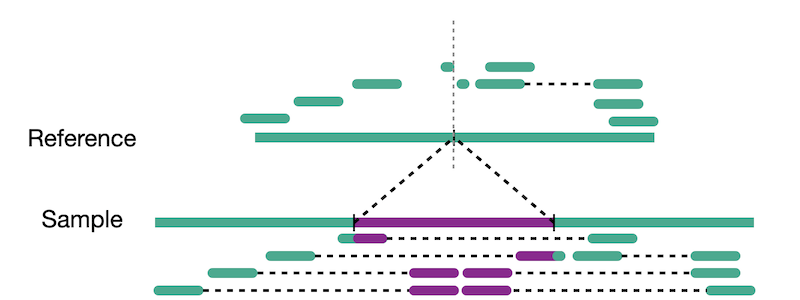

Split reads are those where part of the read aligns to the reference on one side of the breakpoint and the other part of the read aligns to the other side of the deletion breakpoint or to the inserted sequence. Read depth is where increases or decreases in read coverage occur versus the average read coverage of the genome.

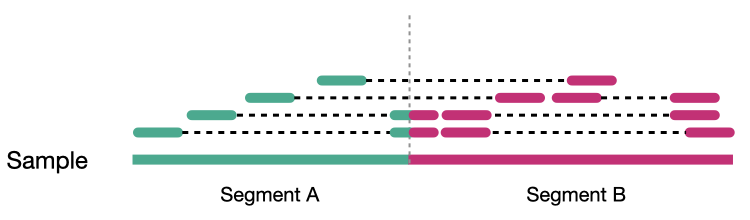

Reads aligned to sample genome

Reads aligned to reference genome

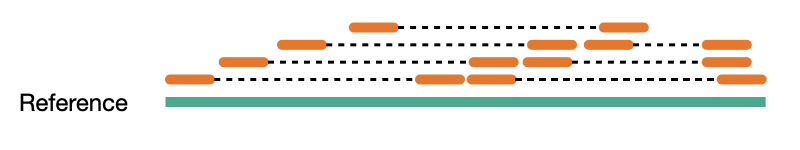

Coverage comes in two variants, sequence coverage and physical coverage. Sequence coverage is the number of times a base was read while physical coverage is the number of times a base was read or spanned by paired reads.

Sequence coverage

When there are no paired reads, sequence coverage equals the physical coverage. However, when paired reads are introduced the two coverage metrics can vary widely.

Physcial coverage

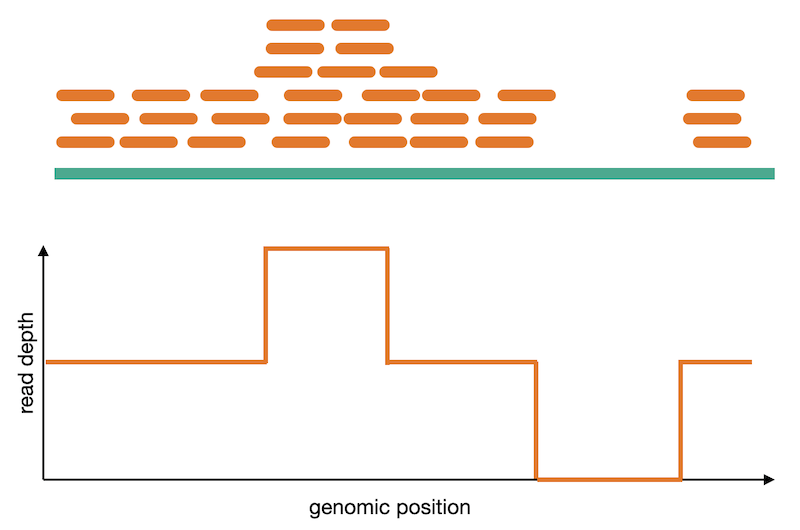

Read depth

Read signatures

Deletion read signature

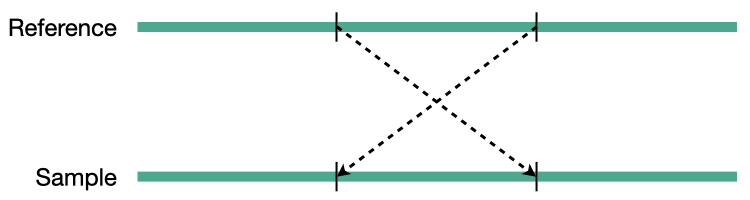

Inversion read signature

Tandem duplication read signature

Translocation read signature

Challenge

What do you think the read signature of an insertion might look like?

Solution

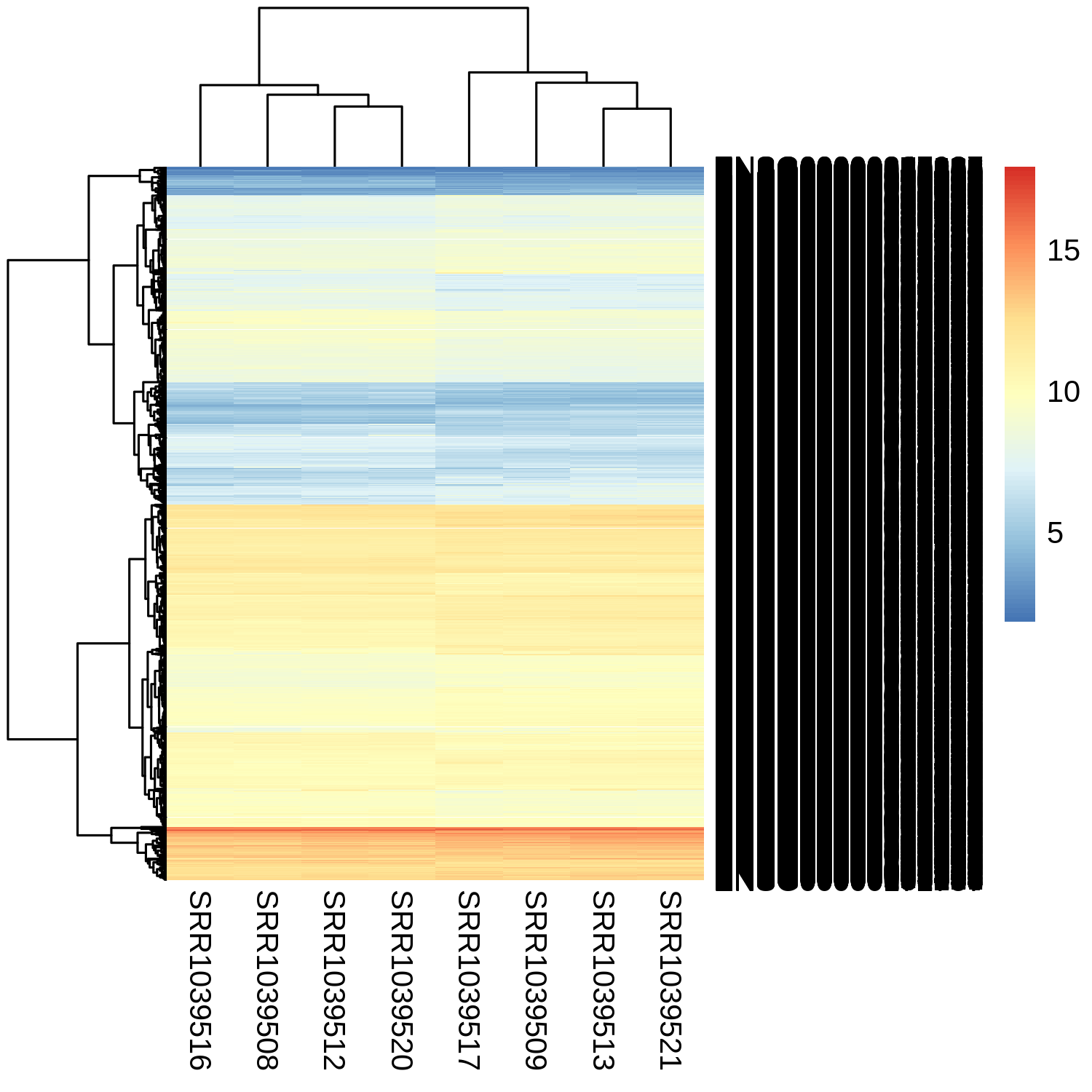

Copy number analysis

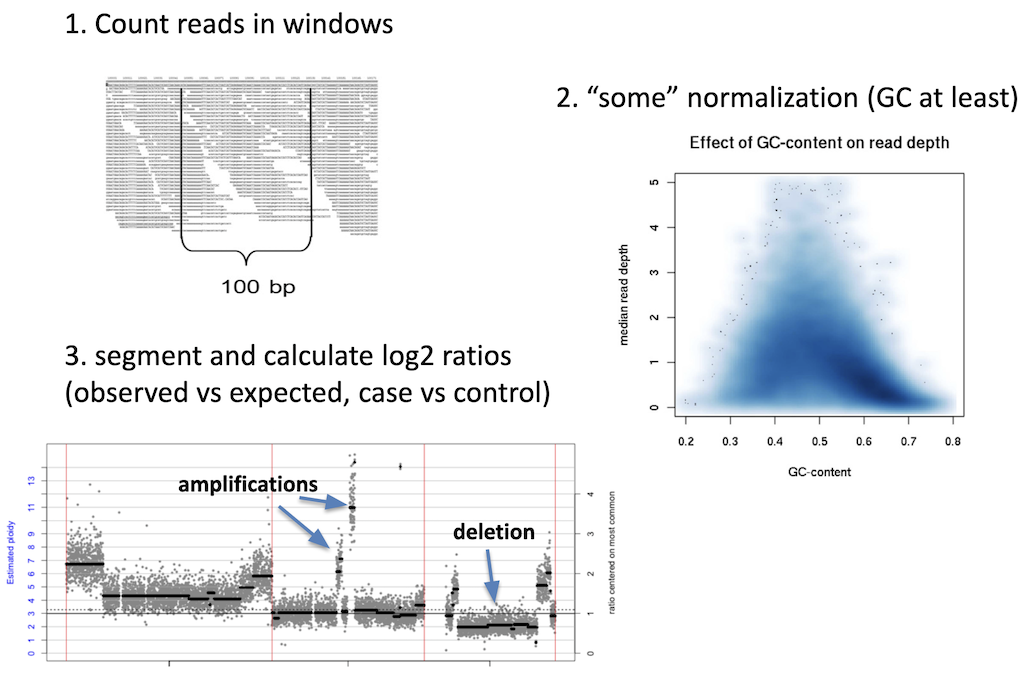

Calling of copy number variation from WGS data is done using read depth, where reads are counted in bins or windows across the entire genome. The counts need to have some normalization applied to them in order to account for sequencing irregularities such as mappability and GC content. These normalized counts can then be converted into their copy number equivalents using a process called segmentation. Read coverage is, however, inheirently noisy. It changes based on genomic regions, DNA quality, and other factors. This makes calling CNVs difficult and is why many CNV callers focus on large variants where it is easier to normalize away smaller confounding changes in read depth.

Caller resolution

We consider caller resolution to be how likely each algorithm is to determine the exact breakpoints of the SV. Precise location of SV breakpoints is an advantage when merging and regenotyping SVs. Here we are looking at the read signatures we’ve discussed so far: read depth, read pair, and split reads. We also see here another category which is assembly, which in this context means local assembly of the reads from the SV region is used to better determine the breakpoints of the SV.

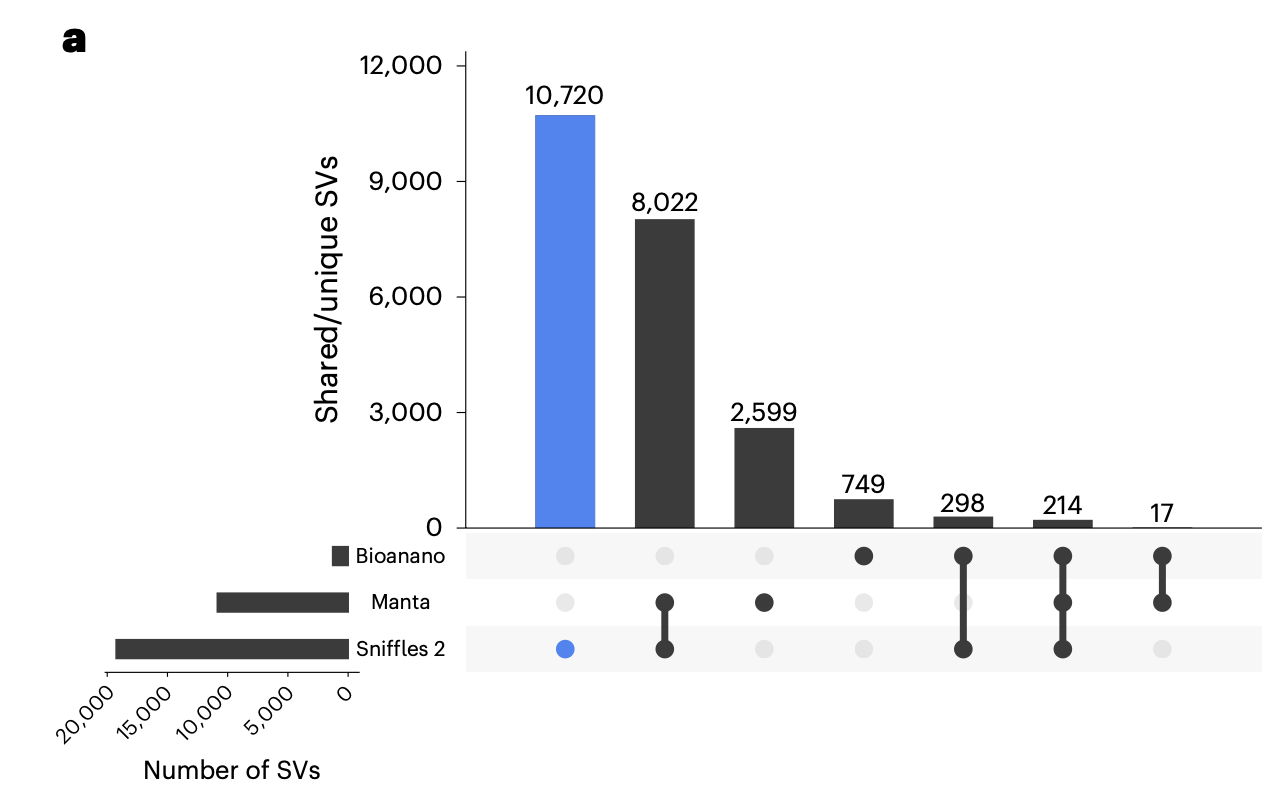

Caller concordance

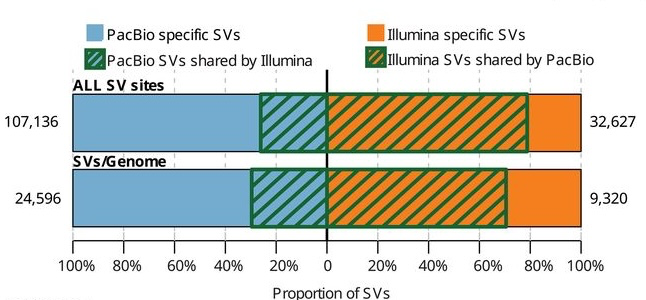

Because SV callers can both use different types of read evidence and apply different weights to the various read signatures, concordance between SV callers is usually quite low in comparison to SNV and InDel variant callers. Concordance between SV calls using different technologies show an even more pronounced lack of concordance.

Key Points

Structural variants are more difficult to identify.

Discovery of SVs usually requires multiple types of read evidence.

There is often significant disagreement between SV callers.

Structural Variation in long reads

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What are the advantages/disadvantages of long reads?

How might we leverage a combination of long and short read data?

Objectives

Investigate how long-read data can improve SV calling.

Long read platforms

The two major platforms in long read sequencing are PacBio and Oxford Nanopore.

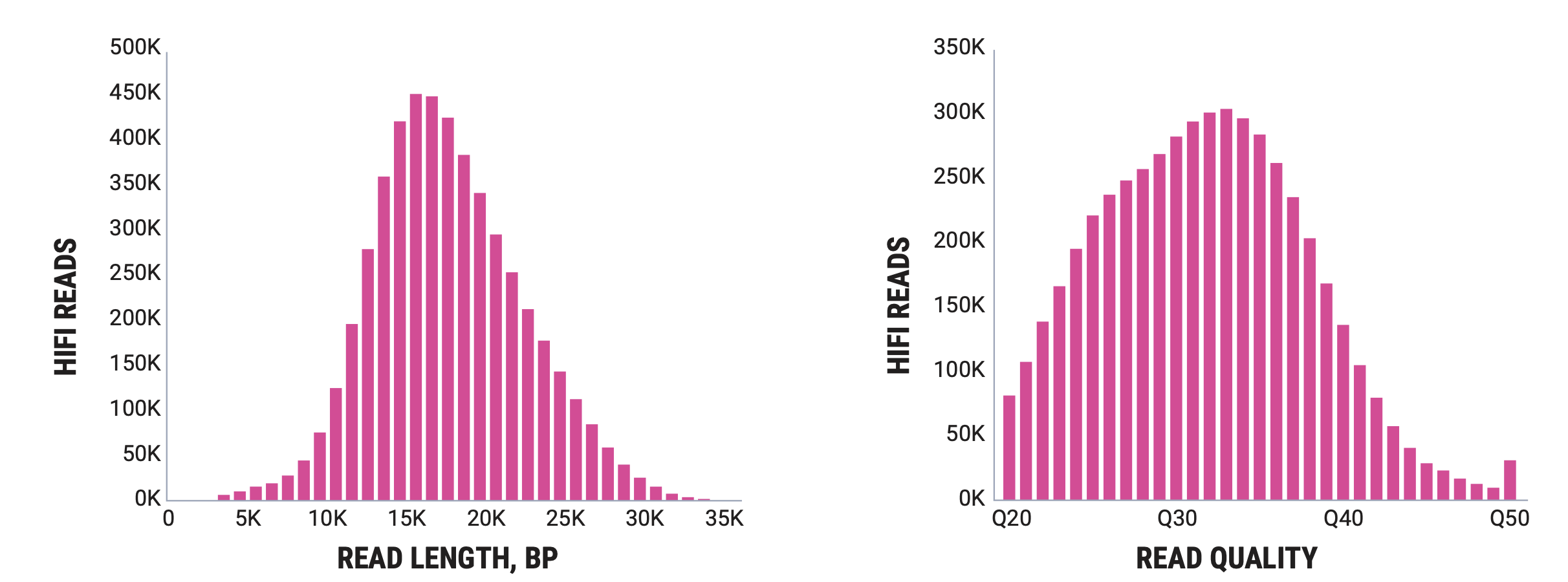

PacBio’s flagship is the Revio, which produces reads in the 5kb to 35kb range with very high accuracy.

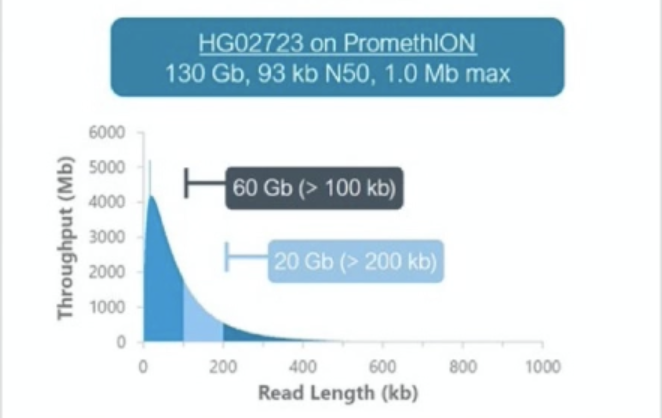

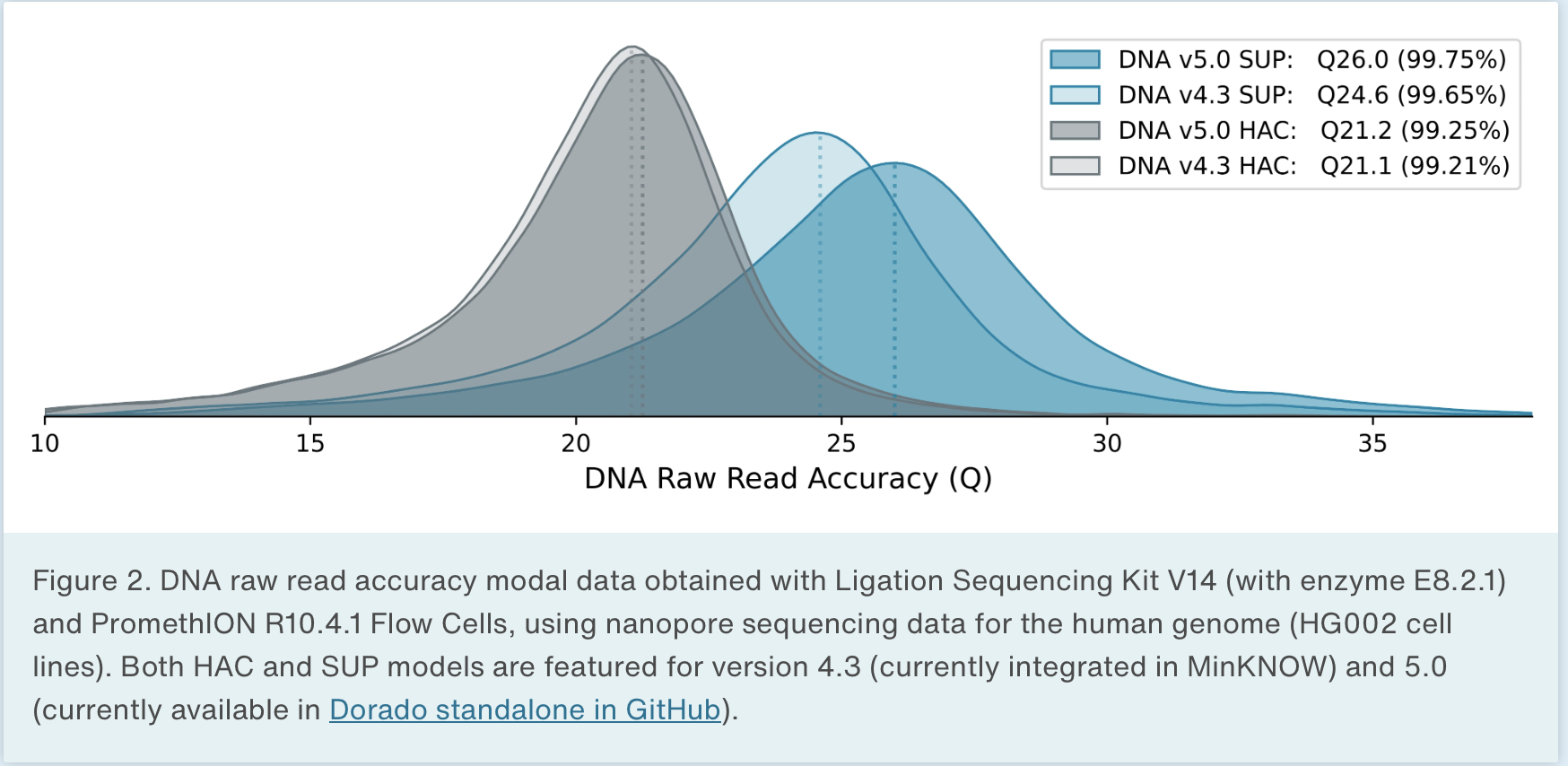

Oxford Nanopore produces sequencers that range in size from the MinION, which is roughly smart phone sized to the PromethION, the high throughput version that we have at NYGC. There are some differences in the read outputs of the various platforms but the MinION has been shown to produce N50 read lengths over 100kb with maximum read lengths greater than 800kb using ONT’s ultra-long sequencing prep. The PromethION can produce even greater N50 values and can produce megabase long reads. Typically these reads are lowe overall base quality than PacBio but ONT has steadily been improving the base quality for their data.

Advantages of long reads

We can call many more SVs using long reads, either by alignment (with enough depth) or assembly (with sufficient assembly quality)1.

Challenge

Why are we able to call more variants with long reads? Why are there Illumina only SVs?

Solution

- Long reads can often be mapped to regions of the genome where short reads fail to map.

Long reads can span larger events making discovery easier and increasing breakpoint accuracy.

- False positives are one possible explaination for Illumina only SVs.

SV calling in long reads

Alignment

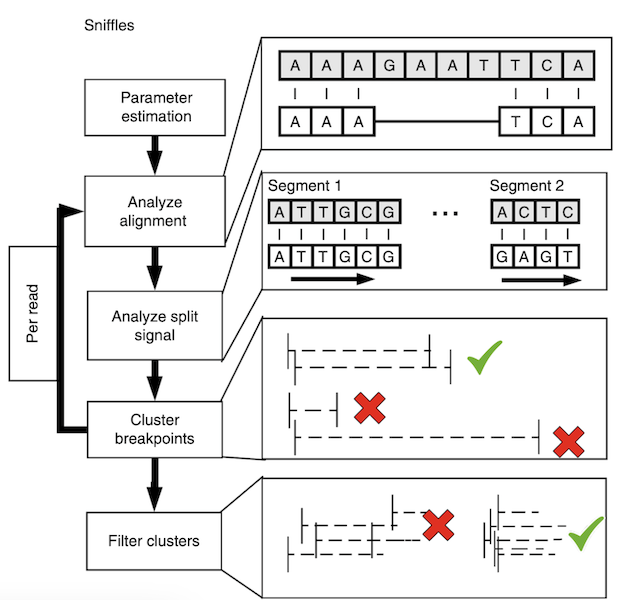

Sniffles uses a three step approach to calling SVs. First it scans the read alignments looking for split reads and inline events. Inline events are insertions and deletions that occur entirely within the read. It puts these SVs into bins and then looks for neighboring bins that can be merged using a repeat aware approach to create these clusters of SV candidates. Each cluster is then re-analyzed and a final determination is made based on read support, expected coverage changes and breakpoint variance.

Assembly

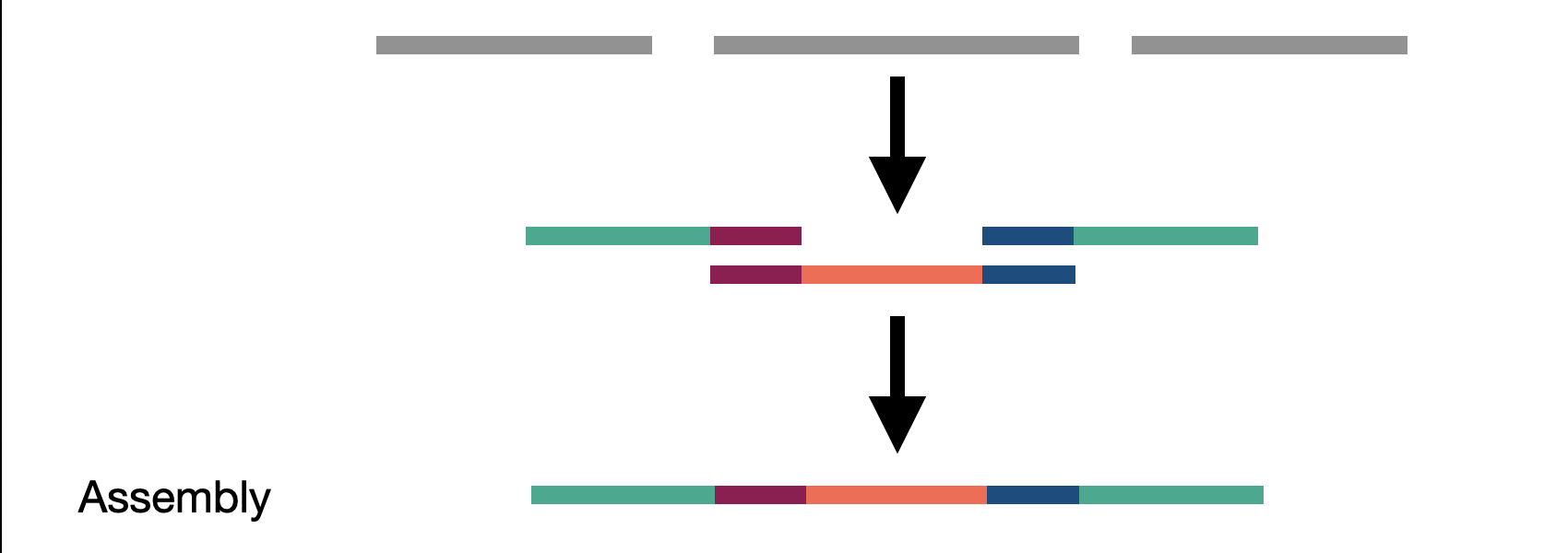

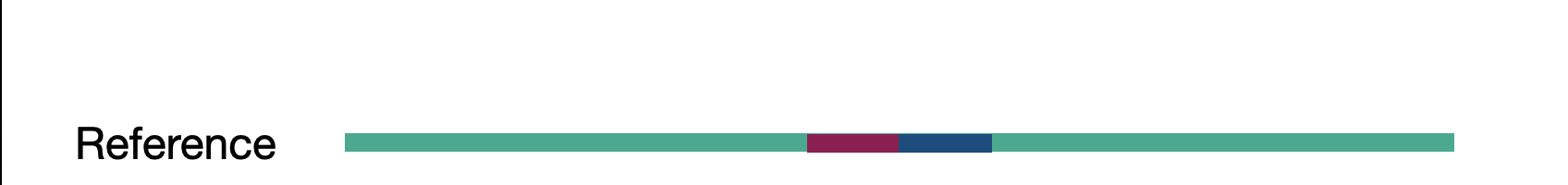

We touched on assembly in the short read section but here we actually refer to whole genome assembly compared to local assembly in short reads. By assembling as much of the genome as possible, including novel insertions, we create a bigger picture of our sample. These assembled fragments, called contigs, can then be aligned to the reference. The contigs act as a sort of ultra-long read as they represent many reads stiched together.

Challenge

Given the previous assembly result and reference sequence, what type of SV are we looking at here?

Drawbacks

- Long read sequencing is becoming more affordable but is still much more expensive than short read

sequencing

SR << LR Alignment << LR Assembly. - Throughput is lower, reducing the turn around time for projects with large numbers of samples.

- Sequencing prep, especially for ultra-long protocols is tedious and difficult to perform consitently.

- Due to cost, we typically don’t sequence samples at as high of depth.

- We are still limited to some extent by the length of our reads and our ablility to span an entire event with one or more reads and some regions of the genome are still very difficult to sequence and align to.

Genotyping LR SVs in SR data

Challenge

Given the section title, what two approaches might we take in creating a hybrid SV call set that uses both long and short reads?

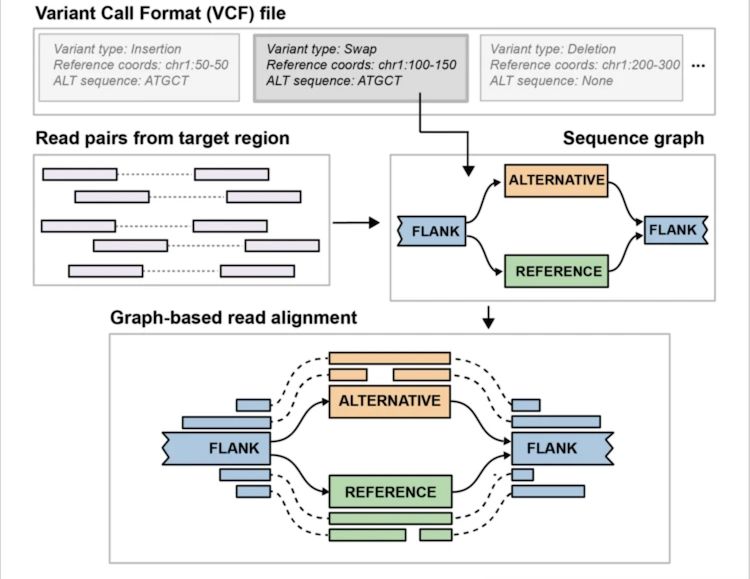

Paragraph

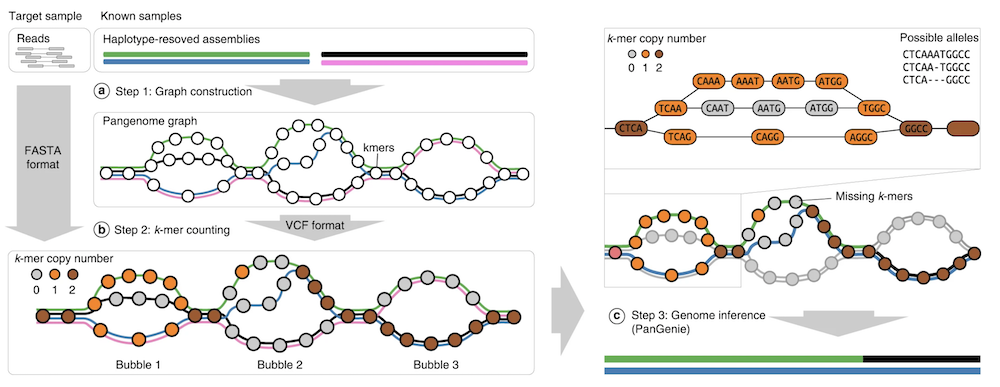

Pangenie

Solution

We can:

- Sequence a number of individuals with long reads and genotype those SV calls in our short read sample set.

- We can leverage existing long read SV callsets and genotype those SVs into out short read sample set.

Question

Does anyone know of any other technologies being used for structural variation?

Callback

Key Points

Long-reads offer significant advantages over short-reads for SV calling.

Genotyping of long-read discovered SVs in short-read data allows for some scalability.

SV Exercises

Overview

Teaching: 0 min

Exercises: 120 minQuestions

How do the calls from short read data compare to those from the long read data?

How do the

Objectives

Align long read data with minimap2

Call SVs in short and long read data

Regenotype LR SVs in a short read data set

Exercises

- Run manta on our SR data for chromosomes 1, 6, and 20

- Run minimap2 on chromosome 20

- Run samtools merge for LR data

- Run sniffles on merged LR bam

Before we really start

Super fun exercise

cd wget https://github.com/arq5x/bedtools2/releases/download/v2.31.0/bedtools.static chmod a+x bedtools.static mv bedtools.static miniconda3/envs/siw/bin/bedtools

First

We’ll make our output directory and change our current directory to it.

mkdir /workshop/output/sv

cd /workshop/output/sv

Manta

There are two steps to running manta on our data. First, we tell Manta what the

inputs are by running configManta.py.

Relevant files - don’t try to execute this, it is just to help you understand what files you need.

_BAM_ -> /data/alignment/combined/NA12878.dedup.bam _REF_ -> /data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa

Run the following code blocks one at a time.

/software/manta-1.6.0.centos6_x86_64/bin/configManta.py \

--bam _BAM_ \

--referenceFasta _REF_ \

--runDir _OUT_

./_OUT_/runWorkflow.py \

--mode local \

--jobs 8 \

--memGb unlimited

Challenge

Run manta on NA12878’s parents (NA12891 and NA12892)

ls -lh _OUT_/results/variants/diploidSV.vcf.gz

-rw-r--r-- 1 student student 137K Aug 27 22:54 manta_NA12878/results/variants/diploidSV.vcf.gz

-rw-r--r-- 1 student student 141K Aug 27 23:04 manta_NA12891/results/variants/diploidSV.vcf.gz

-rw-rw-r-- 1 student student 141K Aug 27 23:07 manta_NA12892/results/variants/diploidSV.vcf.gz

Note - data compression

Storing the raw text of our analyses is very inefficient so we often compress our data using data compression programs (gzip, bgzip). This is comes at a cost of convenience though, we can’t use our “normal” tools (cat, less, grep) to access these files in the same way we couldn’t use less to read our BAM files yesterday. However, many tools come with an equivalent for compressed file usage - zcat, zless, zgrep.

Minimap2

Challenge

If we take the first 1000 lines of a fastq file how many reads do we get?

zcat /data/SV/long_read/inputs/NA12878_NRHG.chr20.fq.gz \

| head -n 20000 \

| minimap2 \

-ayYL \

--MD \

--cs \

-z 600,200 \

-x map-ont \

-t 8 \

-R "@RG\tID:NA12878\tSM:NA12878\tPL:ONT\tPU:PromethION" \

/data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.mmi \

/dev/stdin \

| samtools \

sort \

-M \

-m 4G \

-O bam \

> NA12878.minimap2.bam

Flags

- Minimap

- -a : output in the SAM format

- -y : Copy input FASTA/Q comments to output

- -Y : use soft clipping for supplementary alignments

- -L : write CIGAR with >65535 ops at the CG tag

- –MD : output the MD tag

- –cs : output the cs tag

- -z : Z-drop score and inversion Z-drop score

- -x : preset

- -t : number of threads

- -R : SAM read group line

- Samtools

- -M : Use minimiser for clustering unaligned/unplaced reads

- -m : Set maximum memory per thread

- -O : Specify output format

Sniffles

sniffles \

--threads 8 \

--input /data/SV/long_read/minimap2/NA12878_NRHG.minimap2.chr1-6-20.bam \

--vcf NA12878_NRHG.sniffles.vcf

Regenotyping

Another super fun exercise

We need to make a copy of a file and change some of its contents.

cp /data/SV/inputs/NA12878.paragraph_manifest.txt . vi NA12878.paragraph_manifest.txtChange

/data/SV/bams/NA12878.chr1-6-20.bamto/data/alignment/combined/NA12878.dedup.bam.

cat

id path depth read length sex

NA12878 /data/alignment/combined/NA12878.dedup.bam 33.94 150 F

Relevant files - don’t try to execute this, it is just to help you understand what files you need.

_VCF_ -> /data/SV/inputs/HGSVC_NA12878.chr1-6-20.vcf.gz _MANIFEST_ -> ./NA12878.paragraph_manifest.txt _REF_ -> /data/alignment/references/GRCh38_1000genomes/GRCh38_full_analysis_set_plus_decoy_hla.fa

~/paragraph-v2.4a/bin/multigrmpy.py \

-i _VCF_ \

-m _MANIFEST_ \

-r _REF_ \

-o _OUT_ \

--threads 8 \

-M 400

Secondary challenges

Manta

How many variants do we call using manta?

Solution

zgrep -vc "^#"What is the breakdown by type?

Sniffles

How many variants do we call using sniffles?

Solution

zgrep -vc "^#" NA12878_NRHG.sniffles.vcf.gz4209What is the breakdown by type?

Solution

zgrep -v "^#" NA12878_NRHG.sniffles.vcf.gz | cut -f 8 | cut -f 2 -d ";" | sort | uniq -c11 SVTYPE=BND 1727 SVTYPE=DEL 1 SVTYPE=DUP 2464 SVTYPE=INS 6 SVTYPE=INVDiscussion

Key Points

Cancer Genomics

Overview

Teaching: 2h min

Exercises: 3h minQuestions

Objectives

Open your terminal

To start we will open a terminal.

- Go to the link given to you at the workshop

- Paste the notebook link next to your name into your browser

- Select “Terminal” from the “JupyterLab” launcher (or blue button with a plus in the upper left corner)

- After you have done this put up a green sticky not if you see a flashing box next to a

$

Slides

You can view Nico’s talk here

Generate somatic variant calls with Mutect2

Mutect2 is a somatic mutation caller in the Genome Analysis Toolkit (GATK), designed for detecting single-nucleotide variants (SNVs) and INDELs (insertions and deletions) in cancer genomes. The input for Mutect2 includes preprocessed alignment files (CRAM files). The CRAMs include sequencing reads that have been aligned to a reference genome, sorted, duplicate-marked, and base quality score recalibrated. Mutect2 can call variants from paired tumor and normal sample CRAM files, and also has a tumor-only mode. Mutect2 uses the matched normal to additionally exclude rare germline variation not captured by the germline resource and individual-specific artifacts.

Executing Mutect2

In this example Mutect2 will be run in a few intervals. This will speed up a minature test run of a Mutect2 command. In reality you might decide to restrict Mutect2 to the WGS calling regions recommended in the Mutect2 resource bundle.

# create a BED file of chr22 intervals to do a fast test run cat \ /data/cancer_genomics/allSitesVariant.bed \ | grep "^chr22" \ > ~/workspace/output/allSitesVariant.chr22.bed # run Mutect2 calling command gatk Mutect2 \ -R /data/cancer_genomics/GRCh38_full_analysis_set_plus_decoy_hla.fa \ --intervals ~/workspace/output/allSitesVariant.chr22.bed \ -I /data/cancer_genomics/COLO-829_2B.variantRegions.cram \ -I /data/cancer_genomics/COLO-829BL_1B.variantRegions.cram \ -O ~/workspace/output/COLO-829_2B--COLO-829_829BL_1B.mutect2.unfiltered.vcf

Going further with Mutect2 runs (this is a slower command not run in the workshop)

Real projects should be filtered for technical artifacts with a Panel of Normals (PON) and with common germline variants. The

--germline-resourceand--panel-of-normalsflags are used if Mutect2 is the tool filtering your somatic calls. In the example below the population allele frequency for the alleles that are not in the germline resource is 0.00003125. Read more in the Mutect2 documentation.gatk Mutect2 \ -R /data/cancer_genomics/GRCh38_full_analysis_set_plus_decoy_hla.fa \ -I /data/cancer_genomics/COLO-829_2B.variantRegions.cram \ -I /data/cancer_genomics/COLO-829BL_1B.variantRegions.cram \ --germline-resource AF_ONLY_GNOMAD.vcf.gz \ --af-of-alleles-not-in-resource 0.00003125 \ --panel-of-normals YOUR_PON.vcf.gz \ -O ~/workspace/output/COLO-829_2B--COLO-829_829BL_1B.mutect2.unfiltered.vcf

Filter somatic variant calls

After calling the variants, we filter out low-quality calls using the FilterMutectCalls tool which applies various filters like minimum variant-supporting read depth and mapping quality to distinguish true somatic mutations from artifacts. The full set of filters is described in the Mutect2 GitHub repository.

Filtering Mutect2 calls

gatk FilterMutectCalls \ --reference /data/cancer_genomics/GRCh38_full_analysis_set_plus_decoy_hla.fa \ -V ~/workspace/output/COLO-829_2B--COLO-829_829BL_1B.mutect2.unfiltered.vcf \ --stats ~/workspace/output/COLO-829_2B--COLO-829_829BL_1B.mutect2.unfiltered.vcf.stats \ -O ~/workspace/output/COLO-829_2B--COLO-829_829BL_1B.mutect2.vcf

Anatomy of a VCF file & bcftools

What is a VCF?

Variant Call Format (VCF) files are a widely used file format for representing genetic variation, with an official specification maintained by the Global Alliance for Genomics & Health (GA4GH). This organization also maintains the specifications for the SAM, BAM, and CRAM file formats. The VCF specification has evolved over time, and can now represent SNVs, INDELs, structural variants, and copy number variants, as well as any annotations. A large ecosystem of software exists for parsing and manipulating VCFs, the most useful of which is BCFtools.

BCFtools is maintained by the same folks as SAMtools, and shares a lot of underlying code (namely the HTSlib library). For most routine tasks, such as sorting, filtering, annotating, and summarizing, BCFtools provides utilities so you don’t have to worry about your own implementation. For more complicated queries and analysis, you may need to write your own code, or use a command line tool with more sophisticated expressions like vcfexpress.

Another commonly used file format for representing variants is the Mutation Annotation Format (MAF). This format’s origins lie in The Cancer Genome Atlas (TCGA) project. It’s only meant to represent SNVs and INDELs, and the specification is quite rigid with respect to the allowed annotations. However, the data is represented in a convenient tabular format and the maftools R package exists for easy plotting, analysis, and comparison to TCGA.

File structures